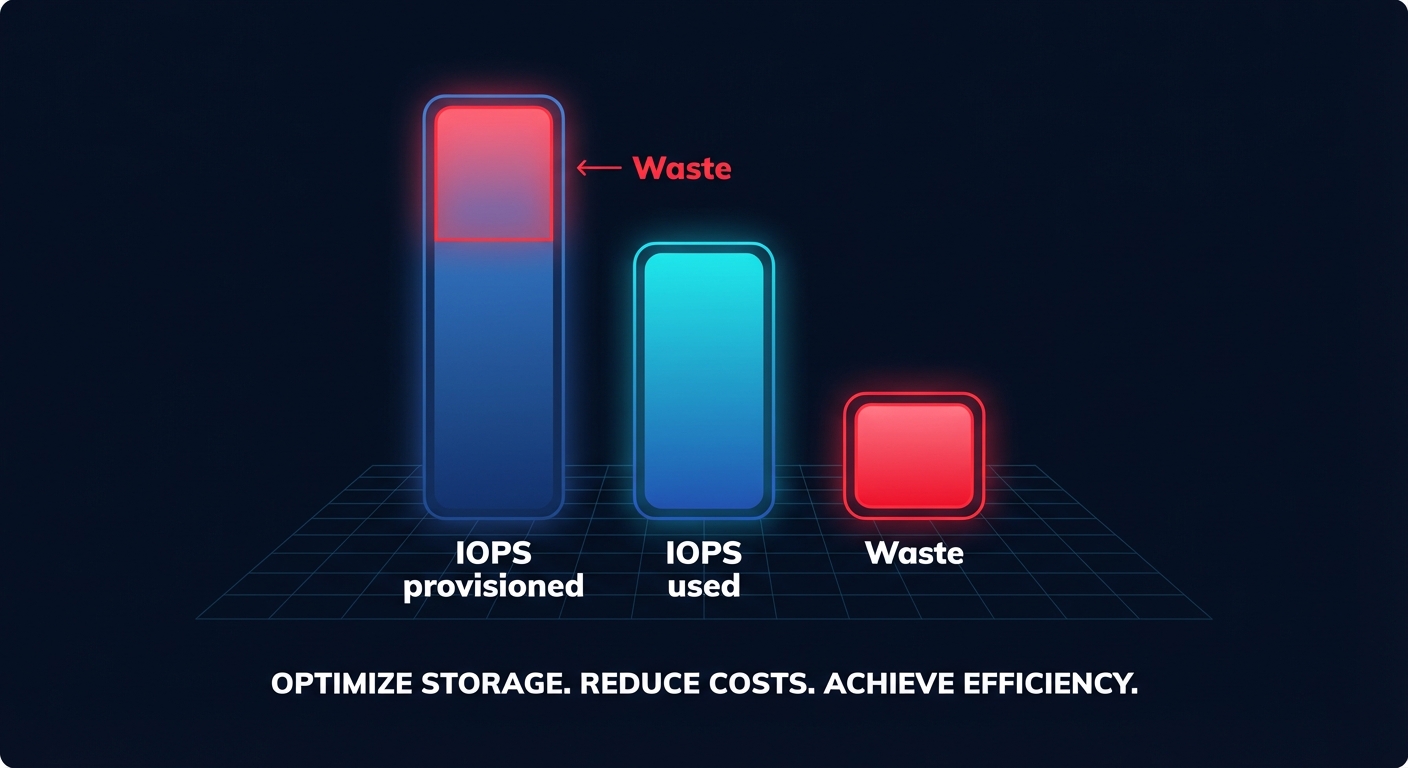

Is your AWS bill climbing because you’re “playing it safe” with storage performance? Provisioned IOPS keep databases snappy, but teams that provision “just in case” can waste 20% to 30% of their EBS spend on capacity that is never used. Here is how to right-size performance without the waste.

What are AWS IOPS and why do they matter?

Input/Output Operations Per Second (IOPS) measures how many read and write operations your Amazon Elastic Block Store (EBS) volume can perform each second. In the AWS ecosystem, not all IOPS are created equal: your actual performance depends heavily on the I/O size of your application’s requests and how they align with the volume’s underlying architecture.

For SSD-backed volumes like gp3 and io2, AWS counts a single I/O operation at a size of up to 256 KiB. If your application sends a 512 KiB request, AWS counts it as two I/O operations. This distinction is critical for AWS EBS performance optimization because a high-throughput workload with large I/O sizes can hit its throughput limit before it ever exhausts its provisioned IOPS. Conversely, random I/O workloads with small I/O sizes, such as transactional databases, depend almost entirely on high IOPS to maintain low latency.
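The counting rule above can be sketched in a few lines of Python. This is an illustrative model only: the function names are hypothetical, and the 3,000 IOPS and 125 MiB/s figures in the usage note are simply the gp3 baseline quoted later in this article.

```python
import math

SSD_MAX_IO_KIB = 256  # per-operation size cap for SSD-backed volumes, per the text


def io_operations_counted(request_kib: float, max_io_kib: int = SSD_MAX_IO_KIB) -> int:
    """Return how many I/O operations a single request is counted as."""
    return max(1, math.ceil(request_kib / max_io_kib))


def limiting_factor(io_size_kib: float, provisioned_iops: int, throughput_mib_s: float) -> str:
    """Report which limit a steady stream of same-sized I/Os reaches first.

    Assumes io_size_kib is at or below the 256 KiB cap.
    """
    # Throughput needed to drive every provisioned IOPS at this I/O size.
    needed_mib_s = provisioned_iops * io_size_kib / 1024
    return "throughput" if needed_mib_s > throughput_mib_s else "iops"
```

With these assumptions, `io_operations_counted(512)` returns `2`, `limiting_factor(256, 3000, 125)` returns `"throughput"`, and `limiting_factor(16, 3000, 125)` returns `"iops"`, which mirrors the large-block versus small-block contrast described above.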

Decoding EBS volume types: gp3 vs. io2

Choosing the right volume type is the first step toward effective EBS cost optimization. For years, gp2 was the standard, but its reliance on a “burst credit” system meant performance could tank unexpectedly once credits were depleted. Because gp2 baseline performance scales with volume size (3 IOPS per GiB), this unpredictability led many engineers to overprovision storage just to get higher baseline IOPS.

Today, gp3 serves as the recommended baseline for most transactional workloads. It provides a consistent 3,000 IOPS and 125 MiB/s throughput regardless of volume size, with no burst credits required. Key characteristics of the current generation volumes include:

- gp3 flexibility: You can provision additional IOPS (up to 16,000) and throughput (up to 1,000 MiB/s) independently of storage capacity. Migrating from gp2 to gp3 typically yields a 20% cost reduction while offering more predictable performance.

- io2 precision: For mission-critical applications requiring sub-millisecond latency and 99.999% durability, io2 and io2 Block Express are the appropriate choice. io2 Block Express supports up to 256,000 IOPS per volume.

- Cost factors: While gp3 costs approximately $0.08 per GB-month, io2 comes at a premium of $0.125 per GB-month plus $0.065 per provisioned IOPS-month.

Understanding this cost-performance tradeoff is essential to avoid paying premium prices for workloads that would perform identically on general-purpose tiers.
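To make the tradeoff concrete, here is a rough cost sketch using the list prices quoted above, treated as monthly rates. It is a simplification rather than a billing calculator: it ignores regional differences, throughput charges, and any tiered io2 pricing. The article does not quote a price for gp3 IOPS beyond the included 3,000, so `extra_iops_price` is a caller-supplied assumption, and the function names are hypothetical.

```python
GP3_PER_GB_MONTH = 0.08      # USD, from the figures above
GP3_INCLUDED_IOPS = 3_000    # gp3 baseline included with every volume
IO2_PER_GB_MONTH = 0.125     # USD, from the figures above
IO2_PER_IOPS_MONTH = 0.065   # USD per provisioned IOPS, from the figures above


def gp3_monthly_cost(size_gb: float, iops: int, extra_iops_price: float) -> float:
    """Estimate gp3 cost; extra_iops_price is an assumed rate for IOPS above 3,000."""
    extra_iops = max(0, iops - GP3_INCLUDED_IOPS)
    return size_gb * GP3_PER_GB_MONTH + extra_iops * extra_iops_price


def io2_monthly_cost(size_gb: float, iops: int) -> float:
    """Estimate io2 cost at a flat per-IOPS rate (no tiering modeled)."""
    return size_gb * IO2_PER_GB_MONTH + iops * IO2_PER_IOPS_MONTH


if __name__ == "__main__":
    # 500 GB at 5,000 IOPS; 0.005 is a placeholder rate, check current AWS pricing.
    print(f"gp3: ${gp3_monthly_cost(500, 5_000, extra_iops_price=0.005):.2f}")
    print(f"io2: ${io2_monthly_cost(500, 5_000):.2f}")
```

Under these assumptions, most of the io2 bill comes from the per-IOPS charge rather than the storage itself, which is why provisioned IOPS deserve the closest scrutiny.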

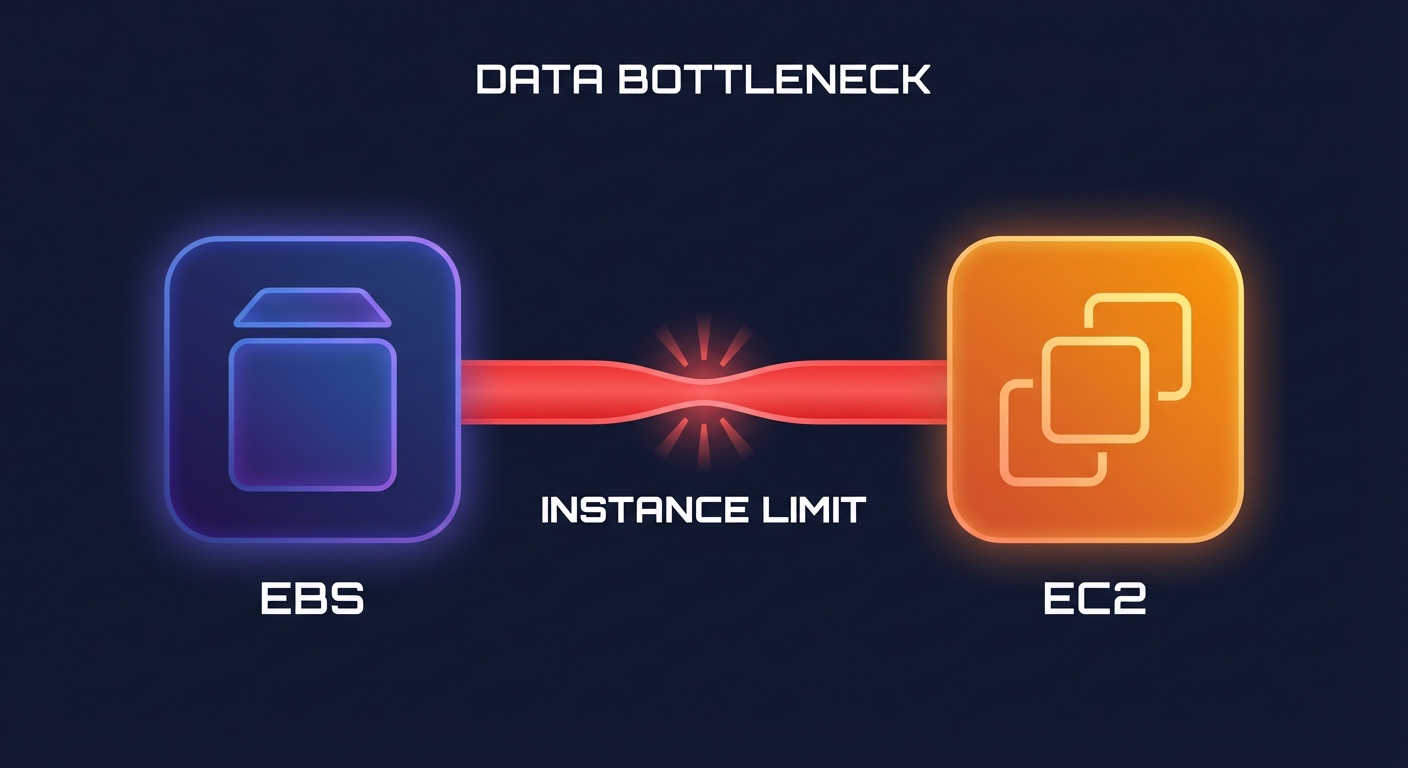

The hidden bottleneck: instance-level limits

A common mistake is provisioning a high-performance volume and attaching it to an undersized EC2 instance. Every EC2 instance has its own EBS-optimized throughput and IOPS limits that act as a hard ceiling, regardless of what you have provisioned on the storage side.

For example, a t3.large instance sustains a baseline of only about 4,000 IOPS to EBS. Even if you provision 50,000 IOPS on an attached io2 volume, the instance will bottleneck the storage performance. To achieve maximum performance, such as the 64,000 IOPS supported by standard io2 volumes, you must use Nitro-based instances that can handle high aggregate I/O. Before tuning your storage, right-size your EC2 instances to ensure the communication pipe is wide enough for your data.
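The ceiling effect is easy to model: the IOPS you can actually drive is the smaller of what the attached volumes provide and what the instance allows. The sketch below uses hypothetical function names and a made-up instance limit; look up the real EBS-optimized figures for your instance type.

```python
def effective_iops(volume_iops: list[int], instance_iops_limit: int) -> int:
    """IOPS achievable across all attached volumes, capped by the instance."""
    return min(sum(volume_iops), instance_iops_limit)


def stranded_iops(volume_iops: list[int], instance_iops_limit: int) -> int:
    """Provisioned IOPS that the instance can never reach."""
    return max(0, sum(volume_iops) - instance_iops_limit)


if __name__ == "__main__":
    # One volume provisioned at 50,000 IOPS on an instance assumed to cap at 20,000.
    print(effective_iops([50_000], instance_iops_limit=20_000))  # 20000
    print(stranded_iops([50_000], instance_iops_limit=20_000))   # 30000
```

Any non-zero result from `stranded_iops` is capacity you are paying for but cannot use until the instance itself is resized.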

Measuring your real-world IOPS demand

To avoid overprovisioning, you must move beyond “best guesses” and analyze actual CloudWatch metrics over a representative period, ideally two to four weeks. You can calculate your actual requirements by summing `VolumeReadOps` and `VolumeWriteOps` and dividing by the number of seconds in the measurement period.
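As a minimal sketch of that calculation, the helper below takes the `Sum` of `VolumeReadOps` and `VolumeWriteOps` for each CloudWatch period and converts it to average IOPS. Fetching the datapoints is out of scope here; the function names and the sample numbers are illustrative assumptions.

```python
def average_iops(read_ops: float, write_ops: float, period_seconds: int) -> float:
    """Average IOPS over one period: (reads + writes) / seconds."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return (read_ops + write_ops) / period_seconds


def iops_series(datapoints: list[tuple[float, float]], period_seconds: int) -> list[float]:
    """Convert (VolumeReadOps sum, VolumeWriteOps sum) pairs into IOPS samples."""
    return [average_iops(r, w, period_seconds) for r, w in datapoints]


if __name__ == "__main__":
    # Two hypothetical 5-minute periods (300 seconds each).
    samples = iops_series([(450_000, 150_000), (90_000, 30_000)], period_seconds=300)
    print(samples)  # [2000.0, 400.0]
```

Shorter periods expose short spikes that a long average would hide, so it is worth keeping the per-period samples rather than a single overall mean.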

If you find that your 95th percentile usage is consistently 2,000 IOPS, but you are paying for a gp3 volume provisioned at 10,000 IOPS, you are effectively paying for idle capacity. You should also monitor `VolumeQueueLength`. If your IOPS are maxed out and the queue length is consistently above one per 1,000 provisioned IOPS, your application will experience increased EBS latency. This indicates you are bottlenecked and actually require more provisioned capacity, rather than just more storage space.
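The two checks above can be combined into a simple decision helper. This is a sketch under stated assumptions, not a definitive sizing rule: the 90% saturation ratio and 50% headroom ratio are arbitrary illustrative thresholds, the percentile uses the nearest-rank method, and all names are hypothetical. Only the "one per 1,000 provisioned IOPS" queue guideline comes from the text.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def rightsizing_hint(
    iops_samples: list[float],
    queue_samples: list[float],
    provisioned_iops: int,
    saturation_ratio: float = 0.9,  # assumed: p95 this close to the limit counts as maxed out
    headroom_ratio: float = 0.5,    # assumed: p95 below this share counts as idle capacity
) -> str:
    """Return 'increase', 'decrease', or 'keep' for a volume's provisioned IOPS."""
    p95 = percentile(iops_samples, 95)
    avg_queue = sum(queue_samples) / len(queue_samples)
    queue_threshold = provisioned_iops / 1000  # one per 1,000 provisioned IOPS
    if p95 >= provisioned_iops * saturation_ratio and avg_queue > queue_threshold:
        return "increase"
    if p95 < provisioned_iops * headroom_ratio:
        return "decrease"
    return "keep"
```

For the example in the text, a volume provisioned at 10,000 IOPS with a 95th percentile of 2,000 and a short queue yields `"decrease"`, while the same volume running near 9,800 IOPS with an average queue length of 12 yields `"increase"`.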

Automating the path to 40% savings

Manual rightsizing is a losing battle in dynamic environments. Workloads shift, and engineering teams rarely have the time to audit thousands of volumes by hand, which is the case for automated AWS rightsizing. When teams provision “just in case,” the resulting waste can account for 20% to 30% of your total EBS spend.

Hykell changes this equation by operating on a performance-first philosophy. The platform continuously monitors your AWS EBS throughput and IOPS usage to identify underutilized resources. By identifying these gaps and automating the migration from legacy gp2 volumes to the more efficient gp3 tier, Hykell helps organizations reduce their cloud costs by up to 40% on autopilot.

Because the Hykell fee model is based purely on the savings generated – if you don’t save, you don’t pay – there is no financial risk to ensuring your infrastructure is as lean as it is performant. If you are ready to see exactly where your storage spend is leaking, get a free cost assessment today and start optimizing your AWS bill without the manual engineering lift.