Did you know that 40% of EC2 instances run at less than 10% CPU utilization during peak hours? Most engineering teams pay for massive idle “safety buffers” they never actually use. You can reclaim 40% of your compute budget by shifting from static provisioning to automated optimization.

Master the art of rightsizing before committing

The most common mistake in cloud financial management is locking in commitments for infrastructure that is already oversized. Rightsizing serves as the foundation of efficiency, frequently delivering a 35% cost reduction with zero impact on application latency. By analyzing at least two to four weeks of CloudWatch metrics, you can identify instances where average CPU utilization sits below 10%, marking them as prime candidates for downsizing or family migration.

Manual rightsizing often fails at scale because workload needs change frequently, and engineers naturally lean toward 30–50% safety buffers. To solve this, modern teams utilize automated AWS rightsizing to match resources to real-time demand on autopilot. For memory-intensive applications, moving from a standard m5.xlarge to a specialized r6g.large can simultaneously improve performance and lower costs. This proactive approach ensures you only pay for the capacity you actually consume, rather than the capacity you feared you might need.

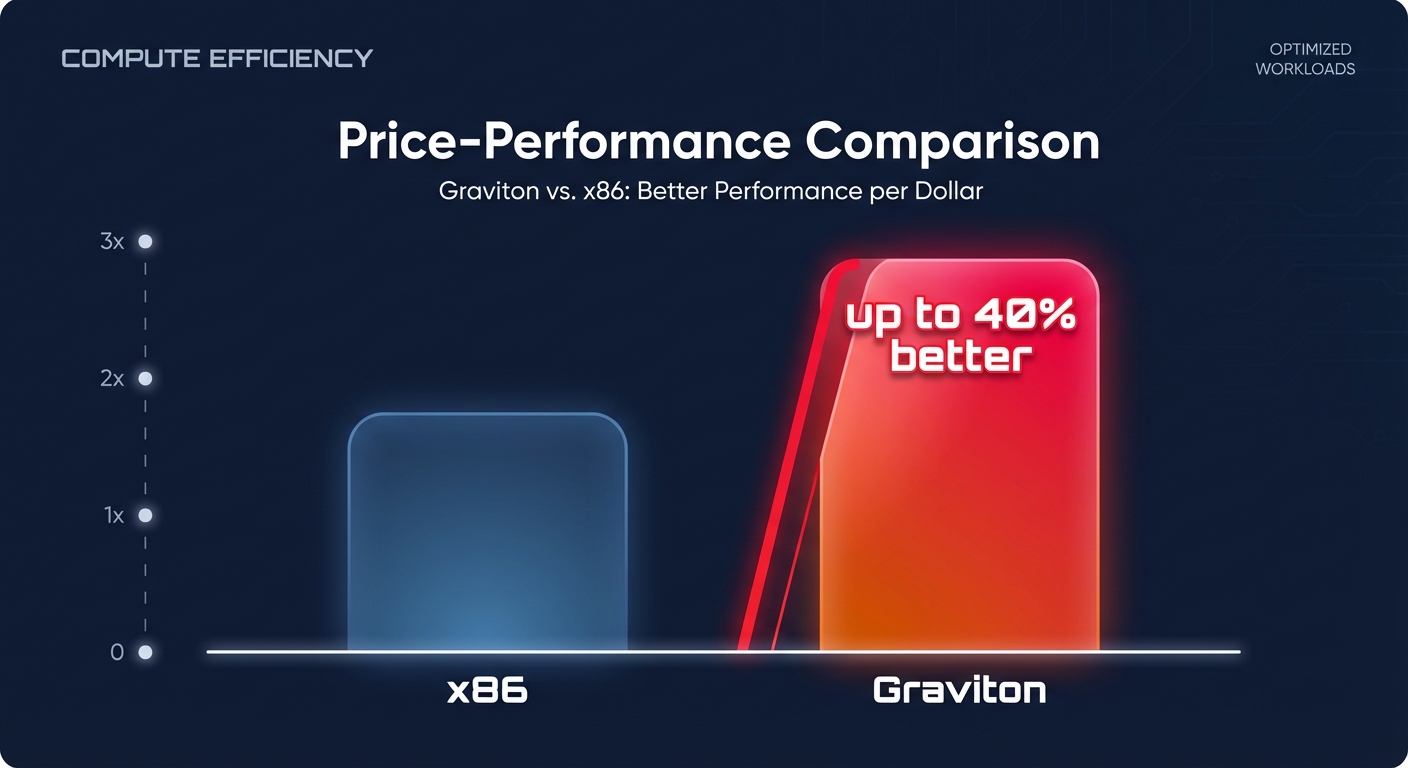

Leverage the Graviton price-performance edge

Migrating to AWS Graviton instances represents one of the fastest ways to improve your unit economics. These ARM-based processors, custom-built by AWS, offer up to 40% better price-performance compared to equivalent x86-based instances. Because Graviton instances cost roughly 20% less per hour than Intel Xeon counterparts, the savings are both immediate and structural. Organizations typically achieve total compute savings reaching 55% when they combine Graviton migration with rightsizing and Savings Plans.

Most containerized applications running on Java, Python, Go, or Node.js are compatible with minimal effort. Hykell helps engineering teams accelerate Graviton gains by identifying compatible workloads and automating the infrastructure changes required for migration. This transition doesn’t just lower your bill; it often results in better throughput and lower latency for microservices and data processing layers, proving that cost reduction doesn’t have to come at the expense of speed.

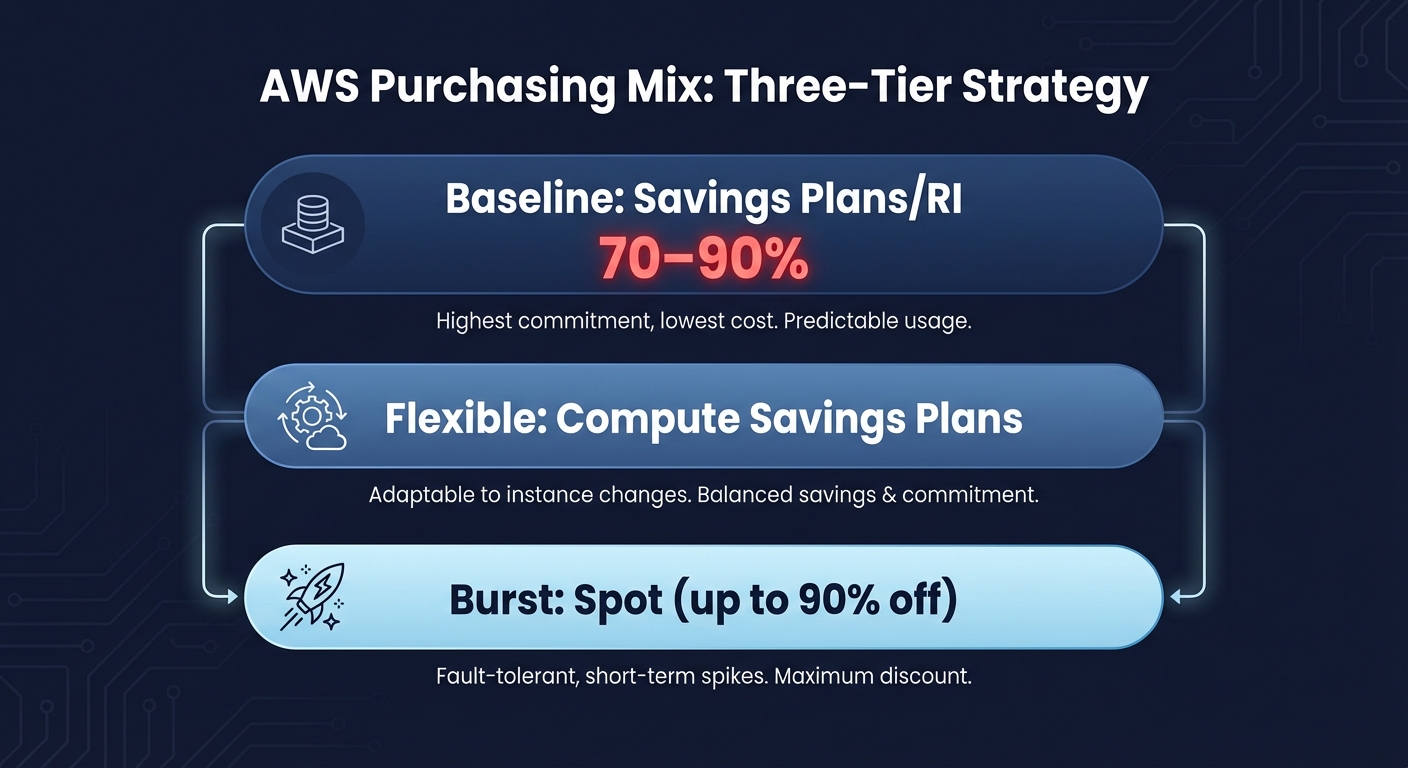

Optimize your purchasing mix for cumulative savings

Relying solely on On-Demand pricing is a recipe for budget overruns, yet over-committing to rigid plans creates its own financial risk. To maximize efficiency, you should orchestrate a three-tier purchasing strategy that balances stability with flexibility. Your baseline tier should cover 70–90% of your steady-state capacity using AWS Savings Plans or Reserved Instances. These instruments provide up to 72% discounts for 3-year commitments, effectively anchoring your costs for 24/7 workloads.

For workloads that shift across regions or instance families, Compute Savings Plans offer a flexible middle layer with up to 66% discounts. Finally, for fault-tolerant workloads like CI/CD builds or stateless web tiers, Spot Instances provide the deepest savings of up to 90%. While Spot instances come with interruption risks, you can maintain production-grade stability by implementing Spot Fleet management to diversify across at least 10 different instance types and multiple availability zones.

Implement intelligent autoscaling patterns

Static fleets are the enemy of cost efficiency. To truly optimize your environment, your EC2 Auto Scaling best practices must move beyond simple CPU thresholds. Target tracking policies that maintain a specific metric, such as a set request count per target, ensure your fleet grows and shrinks in lockstep with actual user behavior. This prevents the “over-scaling” that often happens when metrics are too sensitive or poorly aligned with application performance.

For predictable traffic patterns, such as business-hour spikes for a SaaS platform, scheduled scaling can reduce non-production compute costs by as much as 70% by turning off idle resources overnight. You can further refine this by using predictive scaling to forecast traffic and warm up instances before a rush. This ensures you never trade performance for savings, as the capacity is ready before the demand arrives.

Operationalize savings with Hykell automation

Engineering leaders often lack the bandwidth to manually audit instance types or manage complex commitment portfolios. This is where Hykell transforms your financial outlook by providing automated AWS rate optimization that works in the background. Our platform ensures you always have the best possible discount coverage without the manual effort of buying, selling, or converting reservations as your workloads shift.

Hykell goes beyond simple alerts by continuously monitoring your EBS throughput and EC2 utilization to execute optimizations on autopilot. Because we offer performance-based pricing – where we only take a slice of what we actually save you – there is zero financial risk to your organization. If you don’t save, you don’t pay.

Stop leaving money on the table and start running a leaner, faster AWS environment today. You can calculate your potential savings to see how much you could reclaim, and use Hykell’s observability tools to provide the transparency your FinOps team needs to scale with confidence.