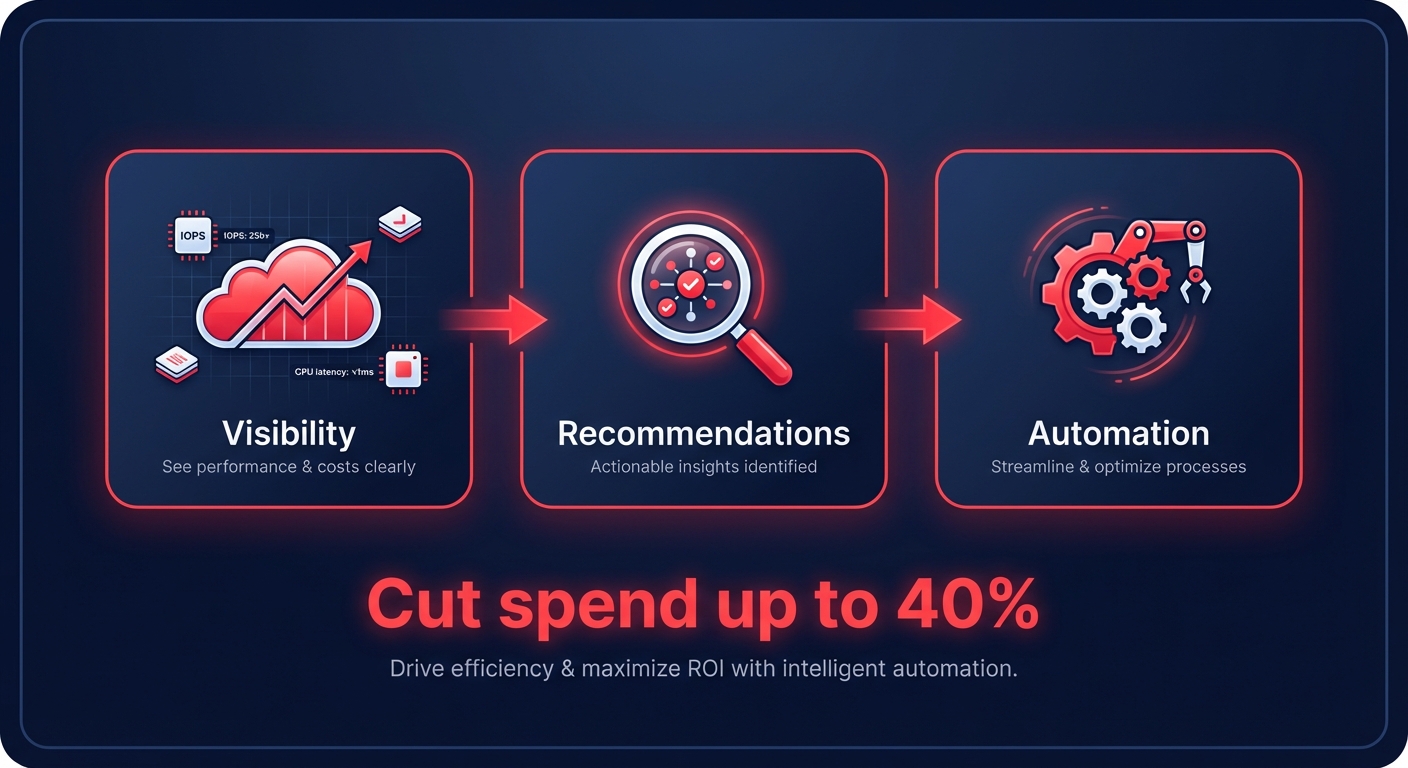

Are you watching your AWS bill climb while engineers spend 15% of their week manually rightsizing? Most enterprises leave 30% of their cloud budget on the table. To cut spend by 40% without hurting performance, you must move beyond visibility toward performance-aware automation.

The gap between cloud visibility and automated execution

Most organizations start their cost management journey with AWS Cost Explorer. While it provides essential visibility into historical trends and forecasting, it functions largely as a retrospective financial statement rather than an active optimizer. To bridge the gap between knowing you are overspending and actually stopping the bleed, you must evaluate tools based on their observability context, automation depth, and risk mitigation capabilities.

A truly effective cost monitoring strategy requires a tool that correlates spending with real-world performance metrics like IOPS, throughput, CPU utilization, and latency. Without this context, you risk downsizing a mission-critical instance that appears idle on average but actually requires high burst capacity for peak loads. Furthermore, achieving a 40% reduction requires moving away from tools that merely provide “recommendations” and adopting platforms that can execute changes autonomously, such as automated Reserved Instance management. This automation must be backed by robust rollback protections to ensure that efficiency never comes at the cost of availability.
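To make the burst-capacity risk concrete, here is a minimal Python sketch of a performance-aware rightsizing check. It looks at CPU only, and the names `UtilizationWindow` and `is_safe_to_downsize` as well as both thresholds are illustrative assumptions, not part of any AWS or Hykell API. A real check would also weigh IOPS, throughput, and memory.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class UtilizationWindow:
    """CPU utilization samples (percent) for one instance over a lookback window."""
    instance_id: str
    cpu_percent: list


def percentile(samples, pct):
    """Nearest-rank percentile; pct is between 0 and 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


def is_safe_to_downsize(window, avg_threshold=20.0, peak_threshold=60.0):
    """Return True only if BOTH average and p99 CPU are low.

    The thresholds are hypothetical defaults chosen for illustration.
    """
    if not window.cpu_percent:
        return False  # no data means no evidence that downsizing is safe
    avg = mean(window.cpu_percent)
    peak = percentile(window.cpu_percent, 99)
    return avg < avg_threshold and peak < peak_threshold


# An instance that idles at 5% but bursts to 95% for 5% of the window.
bursty = UtilizationWindow("i-bursty", [5.0] * 95 + [95.0] * 5)
# An instance that is quiet the whole time.
quiet = UtilizationWindow("i-quiet", [5.0] * 100)

print(is_safe_to_downsize(bursty))  # False: average is low, peak is not
print(is_safe_to_downsize(quiet))   # True
```

The bursty instance averages under 10% CPU, so an average-only rule would flag it for downsizing. Checking the peak alongside the average is what keeps it off the list.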

Evaluating native AWS monitoring tools

AWS offers several native services that are indispensable for a foundational FinOps strategy, yet they often create a high total cost of ownership due to the engineering lift required to manage them. AWS Compute Optimizer uses machine learning to analyze CloudWatch metrics and suggests downsizing for EC2 and Lambda, but it remains a recommendation engine that requires human validation and manual implementation.

The cost optimization pillar within AWS Trusted Advisor is highly effective at identifying “zombie” resources, such as unassociated Elastic IPs or orphaned EBS volumes. While it flags these opportunities, it lacks the native automation to clean them up. To protect against sudden billing spikes caused by misconfigured Lambdas or runaway test environments, AWS Cost Anomaly Detection can establish spending baselines and alert your team before a minor error triples your monthly bill. These native tools provide the necessary data, but the “final mile” of execution often remains a manual bottleneck for DevOps teams.
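The baseline-and-alert idea can be sketched in a few lines of Python. This is a simple rolling mean and standard deviation rule for illustration only; it is not how any AWS service detects anomalies internally, and the function name `find_cost_anomalies` and its default parameters are assumptions.

```python
from statistics import mean, stdev


def find_cost_anomalies(daily_costs, baseline_days=7, sigma=3.0):
    """Flag days whose cost exceeds the trailing baseline by `sigma` deviations.

    Returns a list of (day_index, cost, threshold) tuples.
    """
    anomalies = []
    for i in range(baseline_days, len(daily_costs)):
        baseline = daily_costs[i - baseline_days:i]
        mu = mean(baseline)
        sd = stdev(baseline)
        # Floor the deviation at 1% of the mean so a perfectly flat
        # baseline does not turn every tiny change into an alert.
        threshold = mu + sigma * max(sd, 0.01 * mu)
        if daily_costs[i] > threshold:
            anomalies.append((i, daily_costs[i], threshold))
    return anomalies


# Seven steady days at roughly $100, then a runaway test environment.
costs = [100, 102, 98, 101, 99, 100, 103, 300, 101]
for day, cost, threshold in find_cost_anomalies(costs):
    print(f"day {day}: ${cost} exceeded threshold ${threshold:.2f}")
```

Note that once a spike enters the trailing window it inflates the baseline for the following days, which is one reason production detectors use more robust statistics than this sketch.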

Accelerating gains with Graviton-based savings

One of the most aggressive levers for slashing AWS spend without compromising performance is migrating to AWS Graviton processors. These ARM-based chips offer up to 40% better price-performance over comparable x86 instances. For many engineering leaders, the primary hurdle is software compatibility for Graviton. However, modern runtimes like Python, Java, Node.js, and .NET Core support ARM64 natively, making them prime candidates for immediate migration.
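It helps to translate “price-performance” into a bill. Assuming price-performance means work done per dollar, a gain of 40% does not cut the bill by 40%; it cuts the cost of the same workload by about 29%. The short Python sketch below shows the arithmetic. The function name and the $10,000 monthly figure are hypothetical, and 40% is an upper bound, so treat the result as a best case.

```python
def cost_reduction_for_same_work(price_performance_gain):
    """Fraction of spend saved when the same work runs at better price-performance.

    Assumes price-performance = work per dollar, so a gain g means each
    dollar buys (1 + g) times the work, and the same work costs 1 / (1 + g).
    """
    return 1 - 1 / (1 + price_performance_gain)


monthly_x86_spend = 10_000.0  # hypothetical monthly compute bill in USD
saving = cost_reduction_for_same_work(0.40)
print(f"cost reduction: {saving:.1%}")
print(f"new monthly spend: ${monthly_x86_spend * (1 - saving):,.2f}")
```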

A direct performance comparison between Graviton and Intel often favors Graviton on both performance and price. AWS reports that Graviton3-based C7g instances deliver up to 25% better compute performance than the previous-generation Graviton2-based C6g instances, and Graviton instances are generally priced up to 20% below comparable x86 alternatives. Hykell is designed to help you accelerate your Graviton gains by providing automated workload compatibility assessments and migration planning. This allows your organization to stack Graviton-specific savings on top of your existing Reserved Instances and Savings Plans, compounding your total ROI.

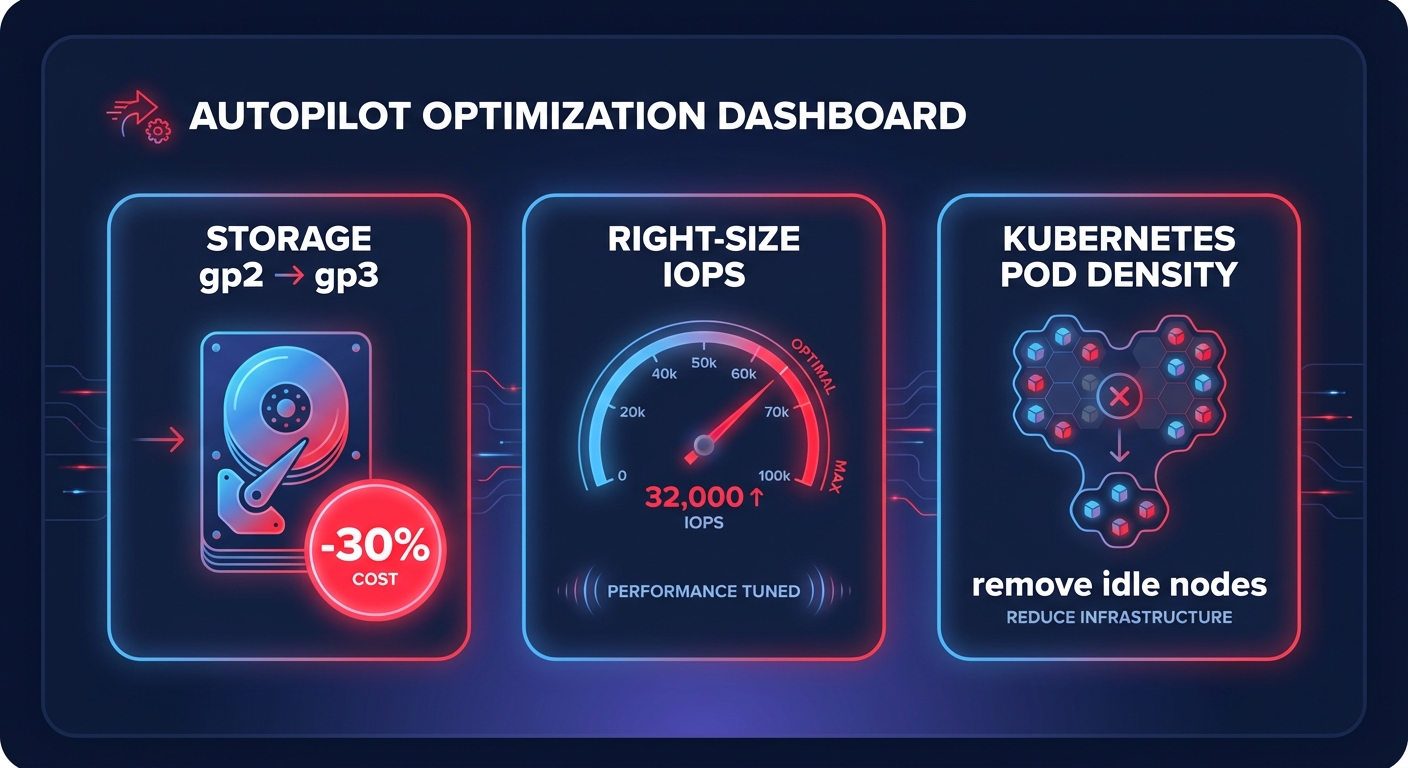

Optimizing storage and Kubernetes on autopilot

Compute is only one part of the equation; storage and container orchestration are often where the largest hidden costs reside. For example, migrating from gp2 to gp3 volumes can save you 20% to 30% on storage costs while maintaining or even improving performance. Many teams inadvertently provision high IOPS that are never utilized, creating significant waste. Effective cloud resource rightsizing tools should identify these gaps and adjust volumes dynamically.
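A rough Python sketch of the gp2 versus gp3 comparison follows. The prices are assumptions based on published us-east-1 list prices at the time of writing ($0.10 per GB-month for gp2; $0.08 per GB-month for gp3, plus $0.005 per provisioned IOPS above 3,000 and $0.04 per MB/s above 125). Check current pricing for your region before relying on the numbers.

```python
# Assumed us-east-1 list prices in USD per month; verify before use.
GP2_PER_GB = 0.10
GP3_PER_GB = 0.08
GP3_FREE_IOPS = 3000
GP3_PER_EXTRA_IOPS = 0.005
GP3_FREE_THROUGHPUT_MBPS = 125
GP3_PER_EXTRA_MBPS = 0.04


def gp2_monthly_cost(size_gb):
    return size_gb * GP2_PER_GB


def gp2_baseline_iops(size_gb):
    """gp2 baseline scales at 3 IOPS per GB, between 100 and 16,000."""
    return min(16000, max(100, 3 * size_gb))


def gp3_monthly_cost(size_gb, iops=GP3_FREE_IOPS, throughput_mbps=GP3_FREE_THROUGHPUT_MBPS):
    extra_iops = max(0, iops - GP3_FREE_IOPS)
    extra_mbps = max(0, throughput_mbps - GP3_FREE_THROUGHPUT_MBPS)
    return (size_gb * GP3_PER_GB
            + extra_iops * GP3_PER_EXTRA_IOPS
            + extra_mbps * GP3_PER_EXTRA_MBPS)


def migration_saving(size_gb, match_gp2_iops=True):
    """Fractional saving from moving one volume from gp2 to gp3."""
    iops = max(GP3_FREE_IOPS, gp2_baseline_iops(size_gb)) if match_gp2_iops else GP3_FREE_IOPS
    old = gp2_monthly_cost(size_gb)
    new = gp3_monthly_cost(size_gb, iops=iops)
    return 1 - new / old


print(f"500 GB volume:  {migration_saving(500):.1%} saved")
print(f"2000 GB volume: {migration_saving(2000):.1%} saved")
```

A 500 GB gp2 volume has a 1,500 IOPS baseline, so the free 3,000 IOPS on gp3 is already an upgrade and the full per-GB discount applies. A 2,000 GB volume needs 6,000 IOPS to match its gp2 baseline, and paying for the extra 3,000 IOPS shrinks the saving. This is why provisioned IOPS that nobody uses are worth auditing.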

In Amazon Elastic Kubernetes Service (EKS) environments, “container waste” is a common byproduct of over-provisioning nodes without granular pod-level visibility. To solve this, you need advanced cloud observability that allows you to optimize pod density and remove idle cluster capacity in real time. By moving these storage and container adjustments to “autopilot,” your engineering team can focus on feature development rather than manual infrastructure tuning.
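As a simplified illustration of spotting idle cluster capacity, the Python sketch below compares pod CPU requests against each node's allocatable CPU. The `Node` type, the 70% idle threshold, and the sample data are all hypothetical; a real tool would pull these figures from the Kubernetes API and also account for memory, actual usage, and scheduling constraints.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_millicores: int
    pod_requests_millicores: list


def idle_fraction(node):
    """Share of the node's allocatable CPU that no pod has requested."""
    requested = sum(node.pod_requests_millicores)
    return max(0.0, 1 - requested / node.allocatable_cpu_millicores)


def consolidation_candidates(nodes, idle_threshold=0.7):
    """Nodes idle enough that their pods could likely be packed elsewhere."""
    return [n.name for n in nodes if idle_fraction(n) >= idle_threshold]


cluster = [
    Node("node-a", 4000, [500, 250]),         # mostly empty
    Node("node-b", 4000, [1500, 1500, 500]),  # densely packed
]

for node in cluster:
    print(f"{node.name}: {idle_fraction(node):.1%} idle")
print("candidates:", consolidation_candidates(cluster))
```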

Achieving 40% savings with Hykell

The primary reason FinOps initiatives fail to reach their full potential is the reliance on busy engineers to manually click “resize” or “terminate.” Hykell removes this friction by operating as an automated extension of your engineering and finance teams. Our AWS rate optimization strategies utilize AI-powered commitment planning to blend Standard RIs, Convertible RIs, and Savings Plans. This approach maximizes your Effective Savings Rate (ESR) without locking you into the rigid, long-term agreements that often hinder infrastructure agility.
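For readers new to the metric, ESR is commonly defined as the share of on-demand-equivalent spend that you avoided paying. The Python sketch below uses that common definition as an assumption; it is not a description of Hykell's internal calculation, and the dollar figures are made up for illustration.

```python
def effective_savings_rate(on_demand_equivalent, actual_spend):
    """ESR = 1 - (actual spend / what the same usage would cost on demand).

    `actual_spend` should include commitment fees (RIs, Savings Plans)
    plus any usage that still ran at on-demand rates.
    """
    if on_demand_equivalent <= 0:
        raise ValueError("on_demand_equivalent must be positive")
    return 1 - actual_spend / on_demand_equivalent


# Hypothetical month: usage worth $100,000 at on-demand rates.
commitment_fees = 50_000.0       # RIs and Savings Plans, including unused hours
uncovered_on_demand = 18_000.0   # usage not covered by any commitment
esr = effective_savings_rate(100_000.0, commitment_fees + uncovered_on_demand)
print(f"ESR: {esr:.0%}")
```

Because unused commitment hours still count as spend, over-committing lowers ESR just as under-committing does, which is why the blend of commitment types matters.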

The Hykell performance-based pricing model is designed to align with your goals: we only take a slice of the savings we generate. If we don’t save you money, you don’t pay. This eliminates the financial risk typically associated with third-party SaaS tools. By combining real-time monitoring with automated execution, Hykell delivers real results for clients by handling the heavy lifting of cloud cost management silently in the background.

To see the impact on your own infrastructure, you can book a free AWS cost audit today. Discover how Hykell can cut your spend by up to 40% on autopilot while you maintain full control over your performance and scalability.