Are you overprovisioning “just in case” or starving your applications to hit aggressive budget targets? Many engineering leaders treat cost reduction and performance as a zero-sum game, yet a significant share of the average AWS bill, often more than 30%, is pure waste. You can improve efficiency without sacrificing speed.

The framework for systematic cost-performance balancing

Architecting for the cloud requires a constant negotiation between cost and performance. To move beyond guesswork, adopt a framework built around “Effective Performance”: meeting your required performance SLAs on AWS at the lowest possible price point. Organizations that apply these optimization techniques strategically have historically achieved savings of up to 80% on their AWS environments.

The most common obstacle to this balance is the “95% utilization trap”: teams wait for near-total certainty in their usage data before buying commitments, and keep paying high on-demand rates while they analyze logs for weeks. A successful FinOps strategy instead treats cloud infrastructure as a dynamic portfolio that needs continuous adjustment rather than a “set and forget” configuration. That is how you avoid leaving on the table the 20% of potential savings that most organizations lose through poor commitment management.
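
Rather than waiting for perfect data, you can estimate a reasonable commitment from a few weeks of hourly usage. The sketch below assumes a deliberately simplified model: a commitment covers a fixed amount of on-demand-equivalent spend per hour at one flat discount, is paid whether or not it is used, and anything above it is billed on demand. Real Savings Plans and Reserved Instance pricing varies by instance family, term, and payment option, and the function names and usage figures here are hypothetical.

```python
from typing import Sequence


def blended_cost(hourly_usage: Sequence[float], commitment: float, discount: float) -> float:
    """Cost of a period when `commitment` ($/h of on-demand-equivalent usage) is
    billed at a flat `discount` and any usage above it is billed on demand.

    The commitment is paid every hour, used or not (simplified model).
    """
    committed = commitment * (1 - discount) * len(hourly_usage)
    on_demand_overflow = sum(max(0.0, usage - commitment) for usage in hourly_usage)
    return committed + on_demand_overflow


def best_commitment(hourly_usage: Sequence[float], discount: float, step: float = 1.0) -> float:
    """Scan commitment levels from 0 up to peak usage and return the cheapest one."""
    peak = max(hourly_usage)
    candidates = [i * step for i in range(int(peak / step) + 1)]
    return min(candidates, key=lambda c: blended_cost(hourly_usage, c, discount))


# Hypothetical hourly on-demand-equivalent spend, in USD.
usage = [10, 10, 12, 20, 30, 10]
print(blended_cost(usage, 0, 0.3))           # 92.0 (everything on demand)
print(best_commitment(usage, discount=0.3))  # 10.0
```

In this toy model, raising the commitment by one unit only pays off while usage exceeds that level in more than (1 - discount) of the hours, so the optimum sits well below peak. Committing to 10 $/h brings the period cost from 92 to 74, and every week spent waiting for “certain” data is billed at the on-demand total instead.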

Compute: moving beyond basic rightsizing

While AWS compute rightsizing typically yields 20-40% savings, true optimization goes deeper than simply downsizing an instance. You should also consider processor architecture: AWS Graviton instances can deliver up to 40% better price-performance than comparable x86-based instances. For many Linux-based workloads, the migration requires little more than rebuilding packages or container images for arm64, and it can improve throughput and latency at the same time. Benchmark your own workload before switching, because the gains vary by application.
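
Price-performance is easiest to compare as work done per dollar, measured on your own workload. This is a minimal sketch: the `Benchmark` class is hypothetical, and the prices and throughputs are placeholders rather than real quotes, so substitute the on-demand prices for your Region and the sustained throughput you measure in a load test.

```python
from dataclasses import dataclass


@dataclass
class Benchmark:
    label: str
    hourly_price_usd: float     # on-demand price for your Region (look it up)
    requests_per_second: float  # sustained throughput measured for *your* workload

    def requests_per_dollar(self) -> float:
        return self.requests_per_second * 3600 / self.hourly_price_usd


def price_performance_gain(baseline: Benchmark, candidate: Benchmark) -> float:
    """Fractional improvement in requests per dollar (0.25 means 25% better)."""
    return candidate.requests_per_dollar() / baseline.requests_per_dollar() - 1


# Placeholder prices and throughputs, not real quotes.
x86 = Benchmark("x86 baseline", hourly_price_usd=0.20, requests_per_second=1000)
arm = Benchmark("Graviton candidate", hourly_price_usd=0.16, requests_per_second=1100)
print(f"{price_performance_gain(x86, arm):.1%}")  # 37.5%
```

Note that the candidate wins on both axes here (cheaper per hour and faster); if a port is slower per instance, the same calculation tells you whether the lower price still makes up for it.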

Implementing EC2 Auto Scaling best practices also ensures you are not paying for idle capacity during off-peak hours. Target tracking policies that hold average CPU utilization steady, typically between 60% and 70%, keep resources aligned with actual demand. The purchasing model you select matters just as much: blend Reserved Instances and Savings Plans for the steady baseline with Spot Instances for interruption-tolerant burst capacity. Some financial services companies, for example, have achieved a 43% cost reduction with this hybrid approach while keeping full flexibility for variable workloads.
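
To build intuition for how a target tracking policy responds, the capacity it moves toward can be approximated as current capacity scaled by the ratio of the observed metric to the target. This is a simplified sketch, not the exact EC2 Auto Scaling algorithm: it assumes load spreads evenly across instances and ignores warm-up periods and the more conservative scale-in behavior. The function and its defaults are hypothetical.

```python
import math


def desired_capacity(current_instances: int, avg_cpu_percent: float,
                     target_cpu_percent: float = 65.0,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Approximate the group size a CPU target tracking policy converges on.

    Assumes total work (instances x CPU%) stays constant when capacity changes,
    so capacity is scaled by avg_cpu / target and clamped to the group limits.
    """
    if current_instances <= 0 or target_cpu_percent <= 0:
        raise ValueError("current_instances and target_cpu_percent must be positive")
    raw = current_instances * avg_cpu_percent / target_cpu_percent
    return max(min_size, min(max_size, math.ceil(raw)))


print(desired_capacity(4, 90.0))   # 6: peak load, scale out
print(desired_capacity(10, 20.0))  # 4: off-peak, scale in
```

In a real group you would configure this through a scaling policy with `PolicyType` set to `TargetTrackingScaling` and the `ASGAverageCPUUtilization` predefined metric, and let AWS manage the arithmetic.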

Storage: achieving high throughput without the high cost

Storage performance is often the hidden bottleneck in AWS environments. Many teams default to gp2 volumes, but migrating to gp3 can reduce storage costs by approximately 20% per GiB while providing more predictable performance. gp2 performance is tied to volume size (3 IOPS per GiB), whereas gp3 includes a baseline of 3,000 IOPS and 125 MiB/s at any size and lets you provision additional IOPS and throughput independently of capacity. You pay only for the performance your application requires instead of buying oversized volumes just to hit a performance target.
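
A quick cost comparison shows why decoupling size from performance matters. The sketch below assumes illustrative us-east-1 list prices (gp2 at $0.10 per GiB-month; gp3 at $0.08 per GiB-month plus $0.005 per IOPS above 3,000 and $0.04 per MiB/s above 125); verify them against the current Amazon EBS pricing page for your Region. It ignores gp2 burst credits and gp3 per-volume ratio limits, and the function names are hypothetical.

```python
import math

# Illustrative us-east-1 list prices in USD per month; check current pricing.
GP2_PER_GIB = 0.10
GP3_PER_GIB = 0.08
GP3_PER_EXTRA_IOPS = 0.005   # per provisioned IOPS above the included 3,000
GP3_PER_EXTRA_MIBPS = 0.04   # per MiB/s above the included 125

GP3_BASE_IOPS = 3000
GP3_BASE_MIBPS = 125


def gp2_monthly_cost(size_gib: int, target_iops: int) -> float:
    """gp2 baseline IOPS scale with size (3 IOPS per GiB, up to 16,000),
    so hitting an IOPS target can force you to buy extra capacity."""
    if target_iops > 16000:
        raise ValueError("gp2 tops out at 16,000 IOPS")
    required_gib = max(size_gib, math.ceil(target_iops / 3))
    return required_gib * GP2_PER_GIB


def gp3_monthly_cost(size_gib: int, target_iops: int,
                     target_mibps: int = GP3_BASE_MIBPS) -> float:
    """gp3 includes 3,000 IOPS and 125 MiB/s; extra performance is billed separately."""
    extra_iops = max(0, target_iops - GP3_BASE_IOPS)
    extra_mibps = max(0, target_mibps - GP3_BASE_MIBPS)
    return (size_gib * GP3_PER_GIB
            + extra_iops * GP3_PER_EXTRA_IOPS
            + extra_mibps * GP3_PER_EXTRA_MIBPS)


# A 200 GiB database volume that needs 6,000 IOPS.
print(f"gp2: ${gp2_monthly_cost(200, 6000):.2f}/month")  # gp2: $200.00/month
print(f"gp3: ${gp3_monthly_cost(200, 6000):.2f}/month")  # gp3: $31.00/month
```

On gp2 the 6,000 IOPS target forces a 2,000 GiB volume; on gp3 you keep 200 GiB and pay only for the 3,000 extra IOPS.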

Understanding Amazon EBS latency is also critical for mission-critical databases. If your monitoring shows persistently high queue lengths alongside rising latency, you may need the sub-millisecond latency of io2 Block Express, but for most web applications a well-tuned gp3 volume is sufficient. For data-heavy applications, AWS S3 cost optimization relies heavily on S3 Intelligent-Tiering, which moves objects between access tiers automatically and can reduce costs by up to roughly 70% for infrequently accessed data. Its automatic access tiers have no retrieval fees and require no changes to application logic; only the optional Archive Access and Deep Archive Access tiers require restoring objects before they can be read.
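
EBS latency can be derived from CloudWatch volume metrics: average read latency is Sum(VolumeTotalReadTime) divided by Sum(VolumeReadOps) over the same period, and VolumeQueueLength shows whether requests are piling up. The decision rule below is a hypothetical rule of thumb with made-up thresholds (a 1 ms budget, a queue length of 4), not AWS guidance; in practice you might first try raising gp3 provisioned IOPS or throughput before moving to io2 Block Express.

```python
def average_latency_ms(total_time_seconds: float, operations: float) -> float:
    """Average per-operation latency in milliseconds.

    Pass Sum(VolumeTotalReadTime) and Sum(VolumeReadOps) for the same period
    (or the VolumeTotalWriteTime / VolumeWriteOps pair).
    """
    if operations <= 0:
        return 0.0
    return total_time_seconds / operations * 1000


def suggest_volume_type(latency_ms: float, avg_queue_length: float,
                        latency_budget_ms: float = 1.0,
                        queue_threshold: float = 4.0) -> str:
    """Hypothetical rule of thumb: only consider io2 Block Express when requests
    are queueing AND latency misses the application's budget."""
    if latency_ms > latency_budget_ms and avg_queue_length >= queue_threshold:
        return "io2 Block Express"
    return "gp3"


# Illustrative sums for one monitoring period.
read_latency = average_latency_ms(total_time_seconds=12.0, operations=6000)
print(round(read_latency, 2))                                     # 2.0
print(suggest_volume_type(read_latency, avg_queue_length=8.0))    # io2 Block Express
```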

Databases and Kubernetes: managing complex workloads

For managed services like RDS and EKS, waste often hides in unattached volumes, idle replicas, or orphaned clusters. For Aurora performance tuning, rightsize instances so the working set fits in memory; an undersized instance causes “disk thrashing” and, on Aurora Standard, excessive per-request I/O charges. RDS MySQL tuning techniques such as read replicas can offload read traffic from the primary database, allowing you to run a smaller, more cost-effective instance type for the primary.
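
Read replicas only save money if the application actually sends reads to them. The sketch below shows the idea with a hypothetical router that parses SQL text; the hostnames are made up, and production applications more often route per connection or per transaction through their driver or ORM. On Aurora you would typically point reads at the cluster's reader endpoint rather than cycling through replicas yourself. Replication is asynchronous, so reads routed this way may briefly lag behind recent writes.

```python
import itertools
from typing import Iterable


class ReadWriteRouter:
    """Route writes to the primary and spread plain reads across read replicas."""

    def __init__(self, primary: str, replicas: Iterable[str]) -> None:
        self.primary = primary
        replica_list = list(replicas)
        # Round-robin over replicas; None when there are no replicas to use.
        self._replicas = itertools.cycle(replica_list) if replica_list else None

    def endpoint_for(self, sql: str) -> str:
        statement = sql.lstrip().upper()
        # SELECT ... FOR UPDATE takes locks, so it must stay on the primary.
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if is_plain_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter("primary.db.internal",
                         ["replica-1.db.internal", "replica-2.db.internal"])
print(router.endpoint_for("SELECT * FROM orders WHERE id = 42"))  # replica-1.db.internal
print(router.endpoint_for("UPDATE orders SET status = 'paid'"))   # primary.db.internal
```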

In containerized environments, Kubernetes optimization on AWS requires granular, pod-level visibility. Without it, you cannot identify microservices that are over-requesting CPU or memory. By using tools like Karpenter for just-in-time node provisioning, teams have successfully reduced their overall node count by 15% or more. This prevents the common scenario where data planes run underutilized while still incurring significant EC2 and EBS charges.
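
Pod-level visibility becomes actionable once you compare each pod's CPU request with what it actually uses. This sketch assumes you already export per-pod p95 CPU usage from your metrics pipeline; the `PodCpu` record, the 2x over-request threshold, the 20% headroom, and the 50m floor are all hypothetical choices to tune for your workloads.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class PodCpu:
    name: str
    request_millicores: int       # sum of resources.requests.cpu across containers
    p95_usage_millicores: float   # observed usage from your metrics pipeline


def recommended_request(p95_millicores: float, headroom: float = 0.2, floor: int = 50) -> int:
    """p95 usage plus headroom, rounded up to the next 10m, never below `floor`."""
    return max(floor, math.ceil(p95_millicores * (1 + headroom) / 10) * 10)


def over_requesting(pods: Iterable[PodCpu],
                    ratio_threshold: float = 2.0) -> List[Tuple[str, int, int]]:
    """(name, current request, suggested request) for pods requesting at least
    `ratio_threshold` times their p95 usage."""
    findings = []
    for pod in pods:
        usage = max(pod.p95_usage_millicores, 1.0)  # avoid dividing by zero for idle pods
        if pod.request_millicores / usage >= ratio_threshold:
            findings.append((pod.name, pod.request_millicores,
                             recommended_request(pod.p95_usage_millicores)))
    return findings


pods = [PodCpu("checkout", 1000, 180.0), PodCpu("search", 500, 400.0)]
print(over_requesting(pods))  # [('checkout', 1000, 220)]
```

Lowering inflated requests is what lets a node provisioner such as Karpenter pack pods onto fewer nodes; without it, the scheduler keeps reserving capacity that is never used.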

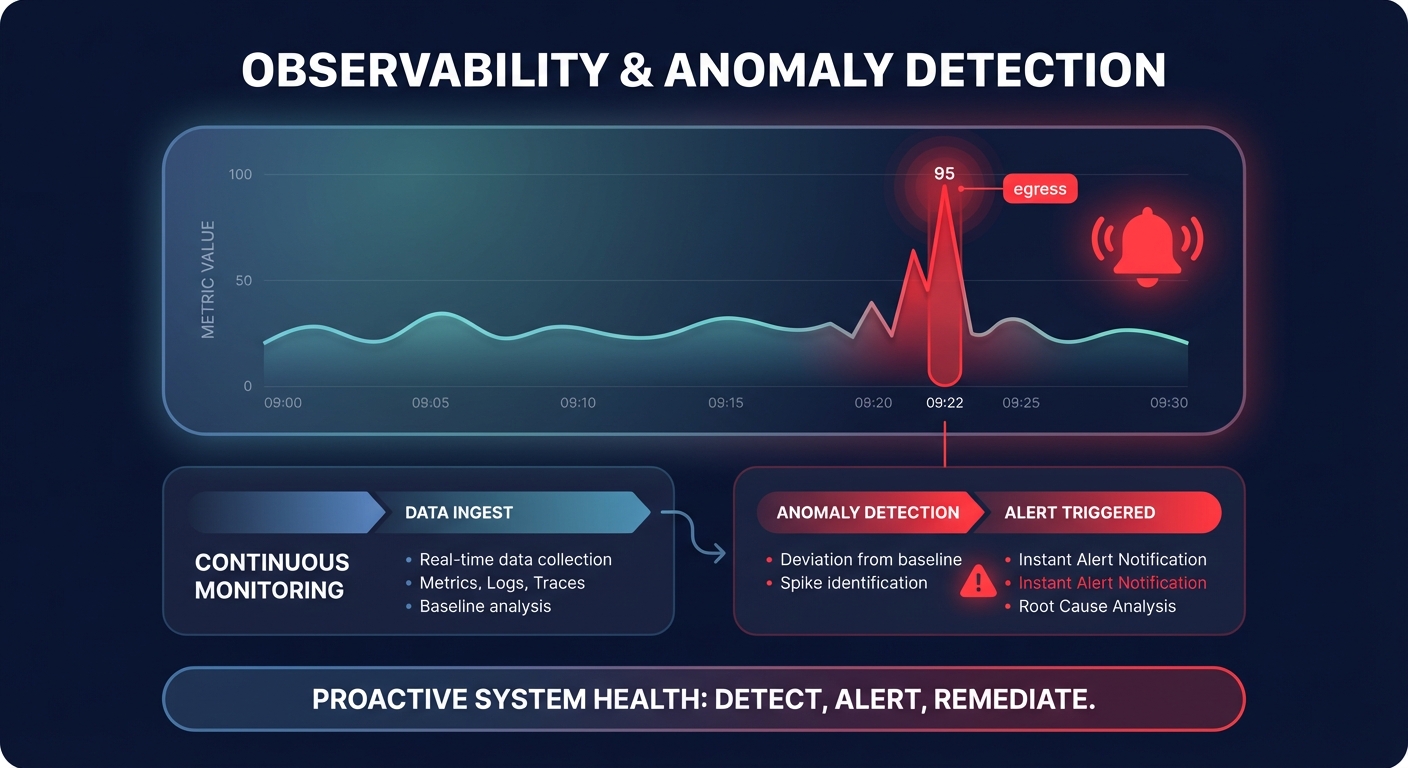

Observability: detecting anomalies before they scale

Effective cost-performance management is impossible without real-time data. Amazon CloudWatch provides the essential metrics for identifying over-provisioned resources: CPU and network I/O out of the box, plus memory once the CloudWatch agent is installed. Monitoring alone is insufficient for modern architectures, however. You should also enable AWS Cost Anomaly Detection to catch misconfigured resources before they impact your budget.
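
To see the basic idea behind spend anomaly alerts, here is a simple statistical sketch that flags days far above a trailing baseline. It is not how AWS Cost Anomaly Detection works (that service uses its own managed machine-learning models); it only illustrates the concept. The daily figures could come from Cost Explorer's `GetCostAndUsage` API with daily granularity, but here they are hypothetical, as are the window and thresholds.

```python
from statistics import mean, stdev
from typing import List, Sequence


def spend_anomalies(daily_spend: Sequence[float], window: int = 7,
                    z_threshold: float = 3.0, min_increase_usd: float = 50.0) -> List[int]:
    """Indices of days whose spend jumps well above the trailing `window` days.

    A day is flagged only if it exceeds the trailing mean by at least
    `min_increase_usd` AND by more than `z_threshold` standard deviations,
    so small wobbles on a flat baseline are not reported.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    flagged = []
    for day in range(window, len(daily_spend)):
        history = daily_spend[day - window:day]
        mu, sigma = mean(history), stdev(history)
        increase = daily_spend[day] - mu
        if increase >= min_increase_usd and (sigma == 0 or increase / sigma > z_threshold):
            flagged.append(day)
    return flagged


spend = [100, 102, 98, 101, 99, 100, 100, 400, 101]  # hypothetical daily USD
print(spend_anomalies(spend))  # [7]
```

The absolute-increase floor matters: on a very stable baseline the standard deviation is tiny, and a pure z-score test would alert on a few dollars of noise.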

A single runaway Lambda function or unoptimized query can generate thousands of dollars in AWS egress or CloudWatch Logs charges before the next billing cycle. For high-growth teams, logging can represent up to 30% of the entire monthly AWS bill if ingestion and retention are not managed carefully. By establishing baseline egress rates and setting automated alerts, you can pinpoint root causes, such as a single microservice generating 80% of cross-region traffic, and remediate them immediately.
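
The baseline-and-attribution approach can be sketched in a few lines. This assumes you have already aggregated cross-region transfer per service (for example from VPC Flow Logs or your cost and usage data); the input format, the 25% tolerance, and the 50% "dominant service" threshold are hypothetical.

```python
from typing import Dict, List, Tuple


def egress_shares(gb_by_service: Dict[str, float]) -> List[Tuple[str, float]]:
    """Each service's share of total cross-region traffic, largest first."""
    total = sum(gb_by_service.values())
    if total == 0:
        return []
    return sorted(((svc, gb / total) for svc, gb in gb_by_service.items()),
                  key=lambda item: item[1], reverse=True)


def check_egress(today_gb: float, baseline_gb: float, gb_by_service: Dict[str, float],
                 tolerance: float = 0.25, dominant_share: float = 0.5) -> List[str]:
    """Alert messages: total egress above baseline, and any service dominating traffic."""
    alerts = []
    if today_gb > baseline_gb * (1 + tolerance):
        alerts.append(f"egress {today_gb:.0f} GB exceeds baseline {baseline_gb:.0f} GB "
                      f"by more than {tolerance:.0%}")
    for service, share in egress_shares(gb_by_service):
        if share >= dominant_share:
            alerts.append(f"{service} accounts for {share:.0%} of cross-region traffic")
    return alerts


traffic = {"orders-api": 800, "search": 150, "auth": 50}  # hypothetical GB per service
for alert in check_egress(today_gb=1000, baseline_gb=600, gb_by_service=traffic):
    print(alert)
```

With these inputs both checks fire: total egress is well above the 750 GB tolerance line, and `orders-api` alone accounts for 80% of the traffic, which points remediation at one service instead of the whole account.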

Putting your AWS optimization on autopilot

Manually managing AWS rate optimization and rightsizing can consume 10% to 15% of an engineering team’s monthly bandwidth. Hykell removes this burden with automated cloud cost optimization that runs in the background. The platform performs detailed cost audits across EC2, EBS, and Kubernetes to uncover inefficiencies, with potential savings that often reach 40% of the total bill.

Using an AI-driven mix of discounts and automated infrastructure changes, Hykell typically reduces AWS spend without requiring code changes or degrading your performance SLAs. You do not have to choose between a fast application and a lean budget: with systematic tradeoffs and automated tooling, your cloud infrastructure stays optimized as your usage changes.

Stop leaving savings on the table. Schedule a free AWS cost audit with Hykell and see exactly where you can cut costs without touching performance.