Is your engineering team spending more time managing the orchestrator than the application code? Choosing between Amazon ECS and EKS is a high-stakes decision that dictates your operational overhead and long-term cloud budget for years to come.

Architectural foundations and the control plane divide

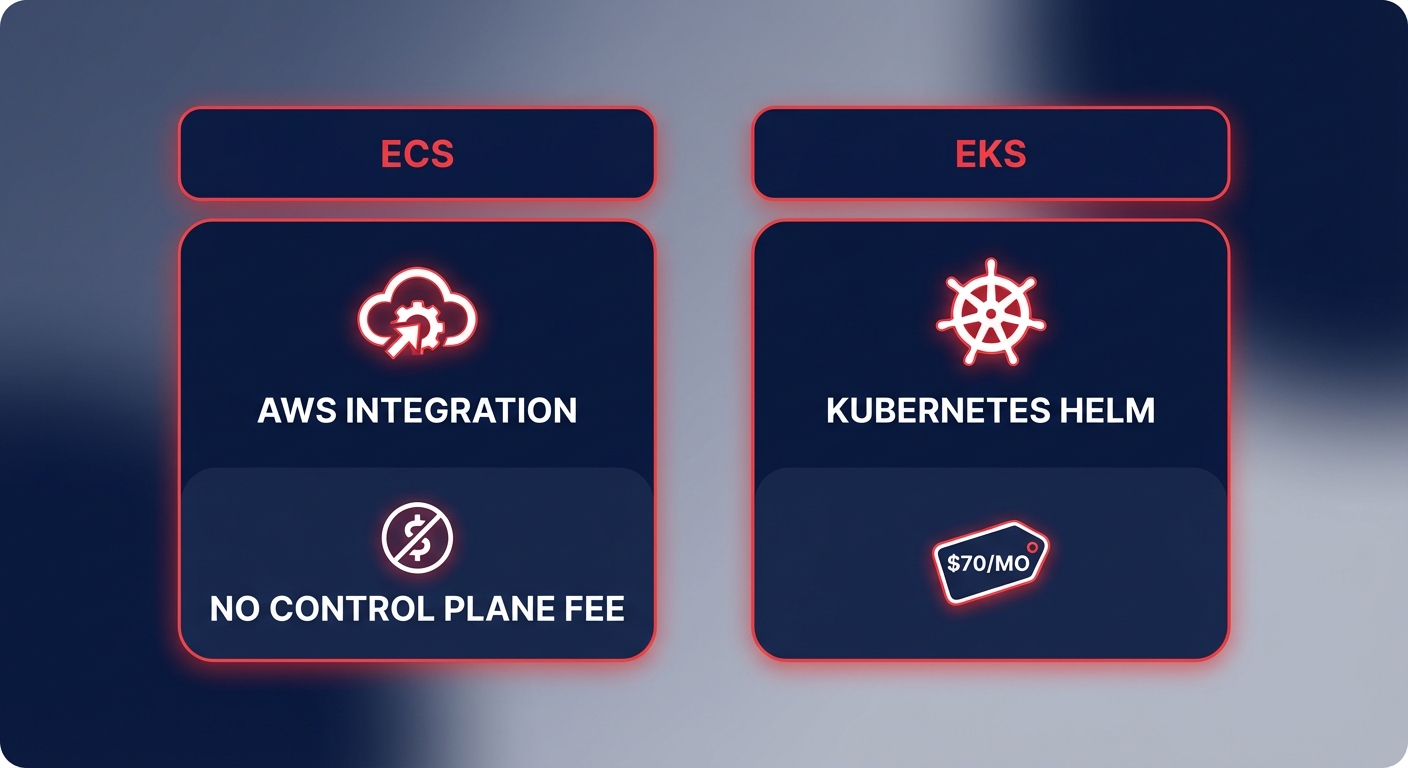

The primary difference between Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) lies in the abstraction layer. ECS is Amazon’s proprietary, opinionated orchestrator designed for simplicity and deep integration with the AWS ecosystem. It uses task definitions, JSON documents that describe each container’s image, CPU and memory allocation, ports, and IAM role. The ECS service scheduler keeps the desired number of tasks running, and CloudWatch metrics feed Service Auto Scaling when you want that number to change with load. Because ECS carries no control plane fees, it is frequently the most cost-effective starting point for smaller teams or stateless applications.
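To make the task definition idea concrete, here is a minimal sketch of one for a small stateless service, built as a Python dictionary and serialized to JSON. The field names follow the ECS task definition format; the family name, container name, image URI, and port are hypothetical placeholders, and a real definition would usually also carry IAM roles and logging settings.

```python
import json

# Hypothetical task definition for a small stateless web service.
# "web-api", "web", the image URI, and port 8080 are illustrative placeholders.
task_definition = {
    "family": "web-api",
    "networkMode": "awsvpc",                  # required for Fargate tasks
    "requiresCompatibilities": ["FARGATE"],
    "cpu": "256",                             # CPU units (256 = 0.25 vCPU)
    "memory": "512",                          # MiB
    "containerDefinitions": [
        {
            "name": "web",
            "image": "example.com/web-api:latest",
            "essential": True,
            "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
        }
    ],
}

# Serialize to the JSON form that ECS tooling works with.
task_definition_json = json.dumps(task_definition, indent=2)
print(task_definition_json)
```

The desired task count is not part of this document. It is set on the ECS service that references the task definition.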

In contrast, EKS provides a managed Kubernetes control plane. While AWS handles the heavy lifting of the API server and the etcd cluster, you are still responsible for everything that runs on top of it: pods, nodes, add-ons, and sophisticated abstractions such as service meshes or advanced Kubernetes-native configurations. EKS charges $0.10 per hour for each cluster on standard Kubernetes version support, which works out to roughly $73 per month. That is negligible for large-scale production, but it can accumulate quickly in multi-account or multi-environment staging setups.
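As a rough illustration of how the per-cluster fee adds up, the sketch below assumes a rate of $0.10 per cluster-hour and a 730-hour month, which lands close to the monthly figure quoted above. Both constants are assumptions to check against current AWS pricing, and the six-cluster scenario is hypothetical.

```python
HOURLY_FEE_USD = 0.10    # assumed control plane rate per cluster-hour
HOURS_PER_MONTH = 730    # average number of hours in a month


def monthly_control_plane_cost(cluster_count: int) -> float:
    """Return the monthly control plane fee in USD for a number of EKS clusters."""
    if cluster_count < 0:
        raise ValueError("cluster_count must be non-negative")
    return round(cluster_count * HOURLY_FEE_USD * HOURS_PER_MONTH, 2)


# Hypothetical setup: dev, staging, and production clusters in two accounts.
single_cluster = monthly_control_plane_cost(1)
six_clusters = monthly_control_plane_cost(6)
print(f"1 cluster:  ${single_cluster:.2f}/month")
print(f"6 clusters: ${six_clusters:.2f}/month")
```

This fee is charged before any worker nodes or Fargate tasks are running, so it is pure overhead for idle or lightly used environments.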

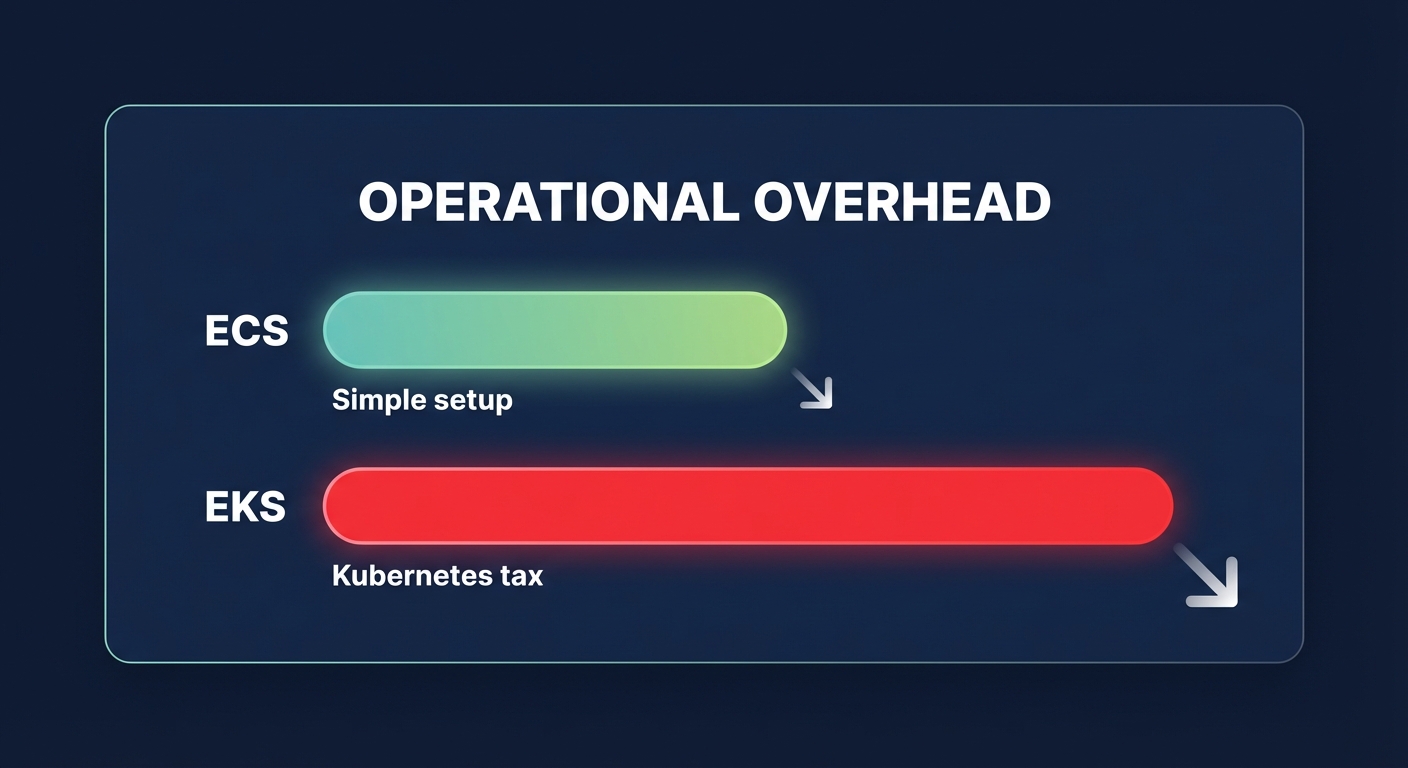

Operational complexity and the Kubernetes tax

For many engineering leaders, the decision boils down to operational overhead. ECS delivers a significantly faster time-to-market because it integrates natively with AWS IAM roles, security groups, and Elastic Load Balancers without requiring third-party plugins. You do not need to worry about etcd patching or networking plugin configurations; the platform handles the orchestration logic behind the scenes, allowing engineers to focus on shipping features rather than managing infrastructure.

EKS demands a higher level of specialized expertise. Your team must understand complex scheduling decisions, node affinity, pod disruption budgets, and how horizontal pod autoscaling cascades through the cluster. If your architecture requires advanced networking, GPU-intensive workloads for machine learning recommendation engines, or multi-cloud portability, this “Kubernetes tax” is often a justifiable investment. However, choosing EKS simply because it is an industry standard can lead to months of operational friction that ECS would have handled automatically.

Scaling strategies and cost impact

Both platforms support AWS Fargate, which eliminates EC2 management entirely, but the way they scale affects your bottom line differently. ECS Service Auto Scaling uses target tracking policies that add or remove tasks to hold a metric near a chosen value, such as 70% average CPU utilization. Combined with scale-in and scale-out cooldowns, this approach helps avoid rapid “scale flapping” and keeps costs tightly aligned with actual demand. You can find more details in our guide to AWS Fargate cost optimization tips.
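A target tracking policy for the 70% CPU example could be described with a configuration like the one sketched below. The field names follow the Application Auto Scaling target tracking configuration as we understand it; the cooldown values are illustrative assumptions rather than recommendations.

```python
import json

# Sketch of a target tracking configuration for an ECS service.
# Cooldown values are illustrative assumptions, not tuned recommendations.
policy_configuration = {
    "TargetValue": 70.0,  # hold average CPU utilization near 70%
    "PredefinedMetricSpecification": {
        "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
    },
    "ScaleOutCooldown": 60,   # seconds to wait after adding tasks
    "ScaleInCooldown": 300,   # longer wait before removing tasks to damp flapping
}

print(json.dumps(policy_configuration, indent=2))
```

In practice a dictionary like this would be supplied as the target tracking configuration when registering a scaling policy against the service's desired count, for example through the AWS SDK or an infrastructure-as-code template.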

EKS employs a more complex two-tier model using the Horizontal Pod Autoscaler (HPA) for individual pods and tools like Karpenter or Cluster Autoscaler for the underlying nodes. Karpenter is particularly powerful for large-scale environments because it provisions the exact instance types needed based on workload requirements and architectural constraints, which is a core component of modern EKS cost management strategies.
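The pod tier of that model can be sketched with the scaling rule described in the Kubernetes HPA documentation: the desired replica count is the current count scaled by the ratio of observed to target metric, rounded up, with no change when the ratio is within a tolerance (0.1 by default). The function below is a simplified illustration only. It ignores min/max replica bounds, pod readiness, and stabilization windows, and the function name is our own.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_metric: float,
                         target_metric: float,
                         tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods at 90% CPU against a 70% target -> scale out
print(hpa_desired_replicas(4, 90.0, 70.0))
# 4 pods at 72% CPU against a 70% target -> within tolerance, no change
print(hpa_desired_replicas(4, 72.0, 70.0))
# 10 pods at 35% CPU against a 70% target -> scale in
print(hpa_desired_replicas(10, 35.0, 70.0))
```

When the new replica count no longer fits on existing nodes, the second tier (Karpenter or Cluster Autoscaler) reacts to the resulting unschedulable pods by adding capacity.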

To manage the financial impact of these compute resources, architects often leverage comprehensive AWS rate optimization strategies. Both platforms benefit from Compute Savings Plans, which offer up to 66% savings with the flexibility to move between Fargate, Lambda, and EC2. For fault-tolerant workloads, our Spot vs Reserved Instance guide highlights how Spot Instances can reduce compute costs by up to 90%, provided you have robust handling for the two-minute termination notice.
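To put those percentages in context, the arithmetic below applies the quoted maximum discounts to a hypothetical $10,000 monthly On-Demand bill. The 66% and 90% figures are best-case ceilings, so real savings depend on instance family, region, commitment term, and Spot market conditions; the bill amount is an assumption chosen for round numbers.

```python
def discounted_cost(on_demand_cost: float, discount: float) -> float:
    """Return the cost in USD after applying a fractional discount (0.0 to 1.0)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0.0 and 1.0")
    return round(on_demand_cost * (1.0 - discount), 2)


ON_DEMAND_MONTHLY_USD = 10_000.0   # hypothetical On-Demand compute bill

# Best-case ceilings quoted in the text, not typical results.
savings_plan_cost = discounted_cost(ON_DEMAND_MONTHLY_USD, 0.66)
spot_cost = discounted_cost(ON_DEMAND_MONTHLY_USD, 0.90)

print(f"Compute Savings Plan (up to 66% off): ${savings_plan_cost:,.2f}")
print(f"Spot Instances (up to 90% off):       ${spot_cost:,.2f}")
```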

Comparing use cases for ECS and EKS

Teams should generally prioritize Amazon ECS when operational simplicity and a lean DevOps footprint are the primary objectives. It is particularly well-suited for stateless web services, straightforward APIs, and environments that do not need the Kubernetes API or its ecosystem. Architects who want to run containerized workloads without wrestling with complex YAML manifests, or who are fully committed to the AWS ecosystem for IAM and VPC integration, will find ECS a more streamlined path.

Conversely, Amazon EKS becomes the logical choice for organizations that require advanced networking features like service meshes, distributed GPU scheduling for machine learning models, or the ability to port manifests across multi-cloud environments. If your microservices architecture relies on specialized Kubernetes tooling or requires fine-grained control over pod scheduling and networking policies, the additional operational investment in EKS is usually necessary to meet those technical requirements.

Maximizing efficiency with automated optimization

Regardless of the platform you choose, the primary source of waste is almost always over-provisioning. Research indicates that many teams waste 30% to 40% of their compute spend by selecting oversized instances or failing to follow AWS EC2 cost optimization best practices. One of the most effective ways to lower the unit cost of either platform is to move to AWS Graviton. Migrating workloads to ARM64 lets you run on Graviton instances, which AWS says provide up to 40% better price-performance than comparable x86 instances.
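A note on reading the Graviton figure: "40% better price-performance" is not the same as a 40% smaller bill. If it is interpreted as 1.4 times the work per dollar, which is an assumption about how the number is defined, the cost of serving the same load drops by about 29%. The sketch below works through that arithmetic with a hypothetical $1,000 monthly x86 spend.

```python
def cost_for_same_throughput(current_cost: float, price_performance_gain: float) -> float:
    """Cost in USD to serve the same load after a fractional price-performance gain."""
    if price_performance_gain < 0:
        raise ValueError("price_performance_gain must be non-negative")
    return round(current_cost / (1.0 + price_performance_gain), 2)


X86_MONTHLY_USD = 1_000.0   # hypothetical current spend on x86 instances

graviton_cost = cost_for_same_throughput(X86_MONTHLY_USD, 0.40)
savings_pct = round((1 - graviton_cost / X86_MONTHLY_USD) * 100, 1)

print(f"Estimated cost for the same throughput: ${graviton_cost:.2f}")
print(f"Implied saving: {savings_pct}%")
```

The result is an upper-bound estimate, since the "up to 40%" figure varies by workload and assumes the application runs cleanly on ARM64.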

Hykell removes the manual burden of these optimizations by continuously analyzing your ECS or EKS environment. We automatically implement right-sizing and manage your Savings Plans and Reserved Instances to ensure you are always on the most efficient rate. Because Hykell operates on a performance-based model, you only pay a slice of the actual savings realized, providing a risk-free path to a leaner cloud bill.

Stop guessing your resource limits and let data drive your infrastructure decisions. You can explore our automated cloud optimization solutions today and see how Hykell can reduce your AWS costs by up to 40% on autopilot.