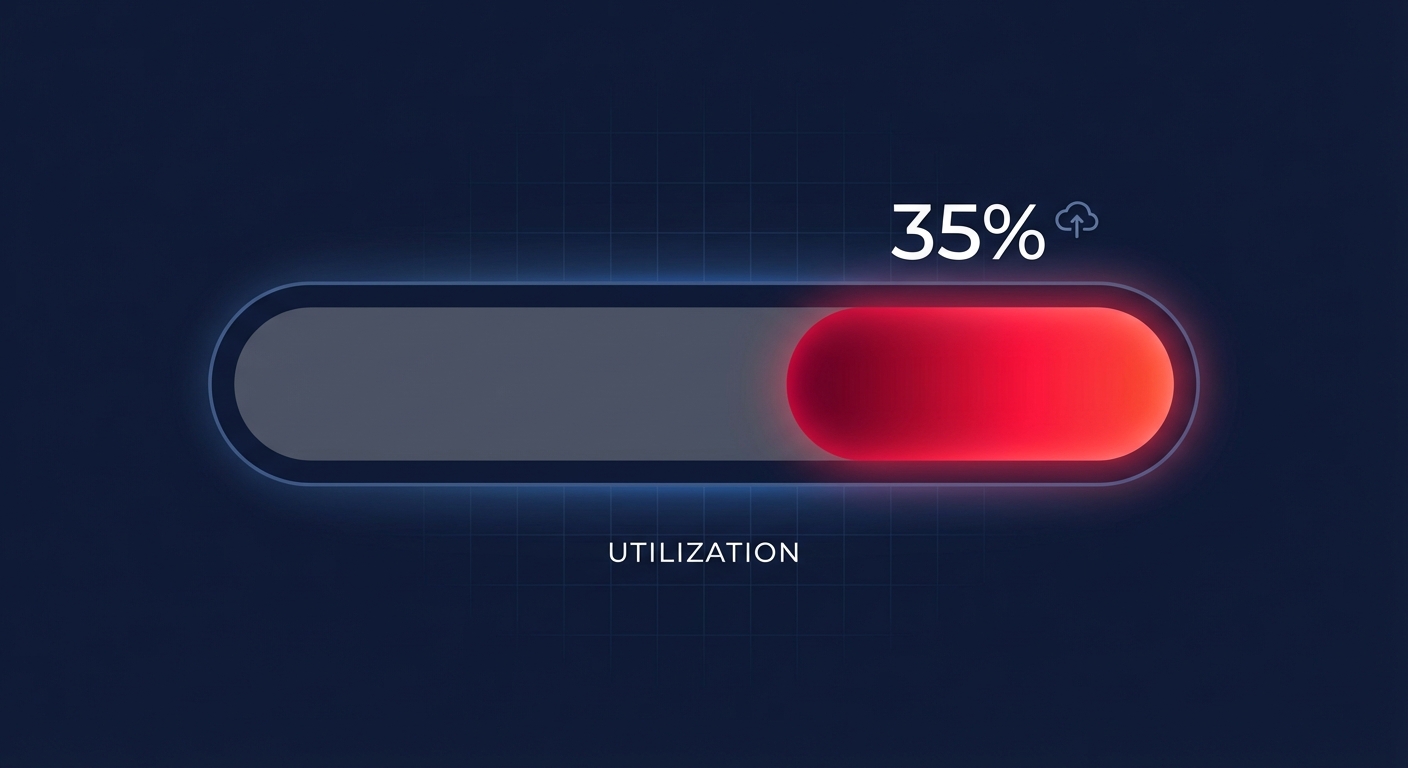

Are you paying for “ghost” capacity that your applications never touch? Many AWS environments run at only 30–40% average utilization, which means roughly 60–70% of the compute budget pays for idle capacity.

Selecting the right EC2 instance family is the difference between a high-performance architecture and a bloated AWS bill. For mid-to-large enterprises, the choice usually centers on the 7th generation of compute-optimized (C7) or memory-optimized (R7) instances. Understanding the technical trade-offs between these families and the silicon powering them is the essential first step toward achieving automated AWS rightsizing.

The core trade-off: compute-heavy vs. memory-bound

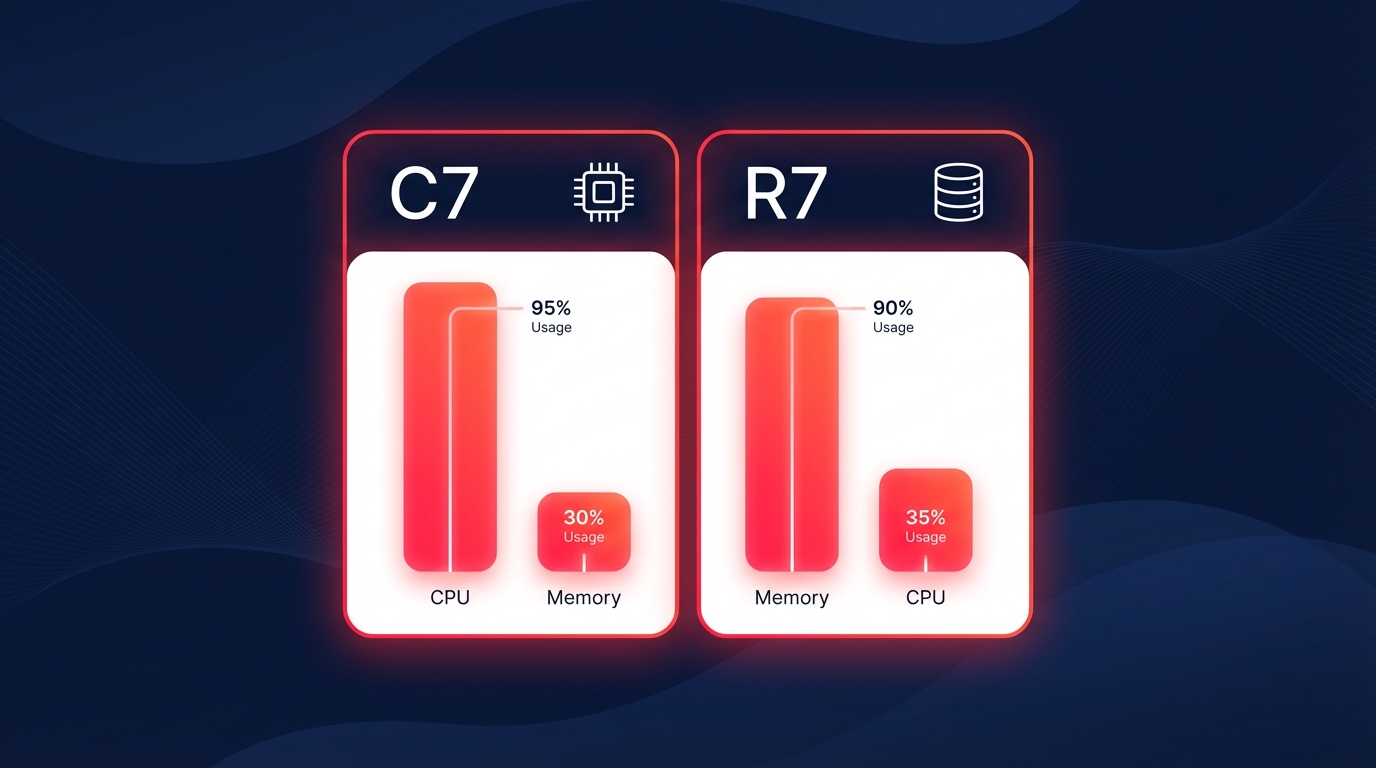

The fundamental difference between the C7 and R7 families lies in their memory-to-vCPU ratios. Choosing incorrectly leads either to performance bottlenecks or to paying for resources that sit unused. The C7 family, with 2 GiB of memory per vCPU, is the workhorse for CPU-bound applications where raw processing power is the primary constraint. These instances excel at high-performance computing (HPC), batch processing, and CPU-based machine learning inference. A standout in this family is the C7g variant, powered by AWS Graviton3 processors, which delivers up to 25% better compute performance than the Graviton2-based C6g. Graviton3 also offers up to 2x faster cryptographic workload performance than Graviton2, which helps accelerate SSL/TLS handshakes in secure web tiers.

Conversely, the R7 family provides 8 GiB of memory per vCPU (an 8:1 memory-to-vCPU ratio), making it a natural fit for data-heavy operations. These instances are ideal for open-source databases like MySQL and PostgreSQL, as well as in-memory caches such as Redis or Memcached. R7g instances use DDR5 memory, which provides 50% more bandwidth than DDR4 and benefits memory-intensive workloads such as financial modeling and large-scale big data analytics. The R7i variant scales further, offering up to 192 vCPUs and 1,536 GiB of memory for the most demanding enterprise workloads.
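To make the ratio trade-off concrete, here is a minimal Python sketch that routes a workload to C7 or R7 based on how much memory it needs per vCPU, then picks the smallest size that covers both dimensions. It assumes Graviton naming (c7g/r7g), a hypothetical subset of sizes, and a deliberately simple rule (2 GiB per vCPU or less goes to C7, anything more to R7). Real selection would also weigh price, network, and storage needs.

```python
from dataclasses import dataclass
from typing import Optional

# Memory-to-vCPU ratios in GiB per vCPU. The 8:1 R7 ratio comes from the text;
# the 2:1 C7 ratio matches AWS's published C7g sizes.
GIB_PER_VCPU = {"c7g": 2, "r7g": 8}

# Hypothetical subset of sizes shared by both families: (size name, vCPUs).
# Verify against current EC2 documentation before relying on it.
SIZES = [("large", 2), ("xlarge", 4), ("2xlarge", 8), ("4xlarge", 16),
         ("8xlarge", 32), ("12xlarge", 48), ("16xlarge", 64)]


@dataclass
class Demand:
    vcpus: float       # peak vCPUs the workload needs (e.g. from P99 data)
    memory_gib: float  # peak memory the workload needs, in GiB


def smallest_fit(family: str, demand: Demand) -> Optional[str]:
    """Return the smallest size in `family` covering both vCPU and memory demand."""
    ratio = GIB_PER_VCPU[family]
    for size, vcpus in SIZES:
        if vcpus >= demand.vcpus and vcpus * ratio >= demand.memory_gib:
            return f"{family}.{size}"
    return None  # demand exceeds the largest size in this table


def pick_instance(demand: Demand) -> Optional[str]:
    """Illustrative rule: <= 2 GiB per vCPU goes to C7, otherwise R7."""
    needed_ratio = demand.memory_gib / demand.vcpus
    family = "c7g" if needed_ratio <= GIB_PER_VCPU["c7g"] else "r7g"
    return smallest_fit(family, demand)


if __name__ == "__main__":
    print(pick_instance(Demand(vcpus=8, memory_gib=12)))  # c7g.2xlarge
    print(pick_instance(Demand(vcpus=4, memory_gib=30)))  # r7g.xlarge
```

A workload needing 4 vCPUs and 30 GiB would need a c7g.4xlarge (16 vCPUs) just to get enough memory, while an r7g.xlarge covers it with 4 vCPUs; that stranded-CPU problem is exactly the misfit the ratio check avoids.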

Silicon matters: Graviton3 vs. Intel Sapphire Rapids

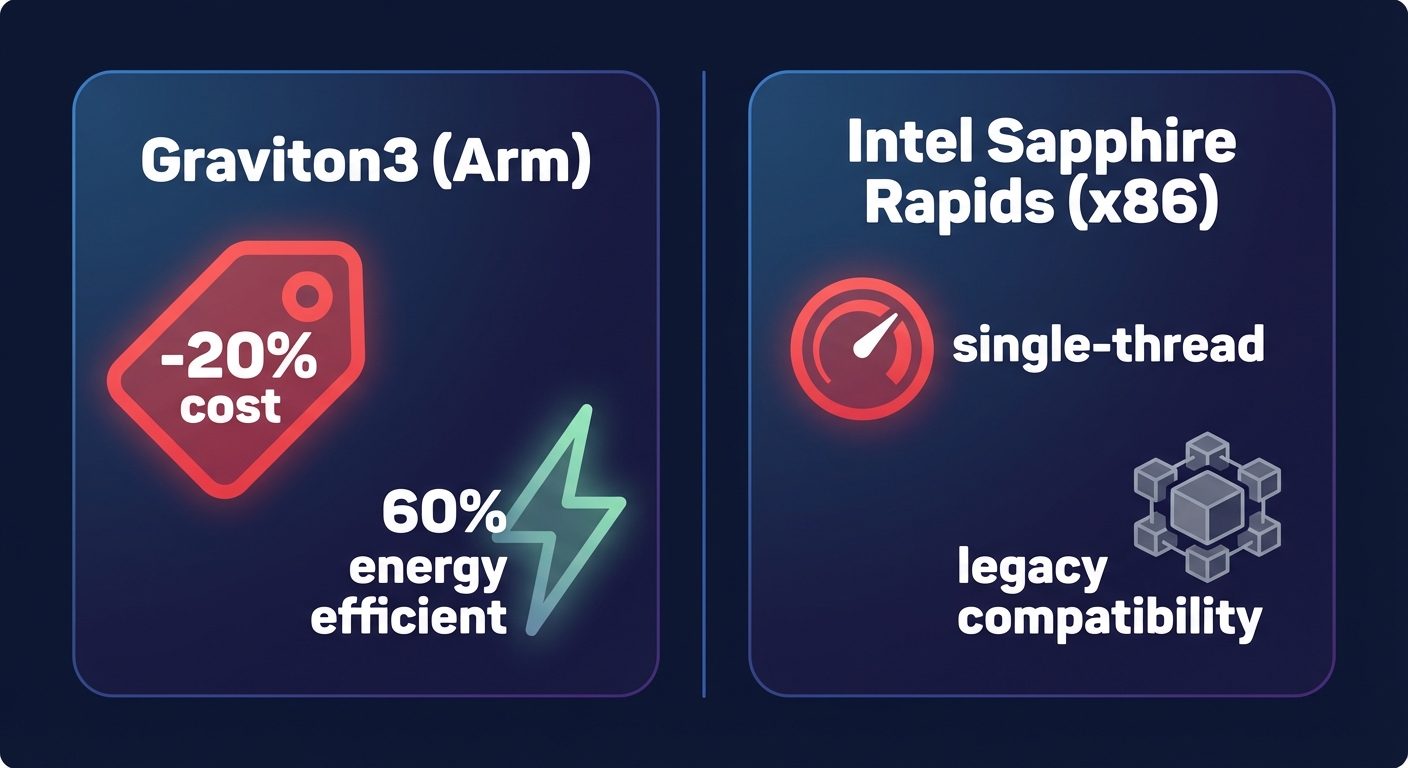

Choosing the underlying processor architecture significantly affects your AWS instance cost. Graviton3-based instances (C7g/R7g) offer some of the strongest price-performance in EC2, at up to 20% lower cost than comparable x86-based instances. These Arm-based processors also support corporate ESG goals: Graviton3-based instances use up to 60% less energy for the same performance than comparable EC2 instances.

While Graviton offers superior economics, Intel 4th Gen Xeon processors (C7i/R7i), known as Sapphire Rapids, remain essential for certain environments. These chips excel in single-threaded performance and are necessary for legacy x86-dependent software or proprietary binaries that lack Arm compatibility. They also feature Advanced Matrix Extensions (AMX) for specialized ML inference workloads that require hardware-level acceleration.

Pricing impact and generation efficiency

Upgrading from the 6th to the 7th generation is as much about infrastructure efficiency as it is about raw speed. Both the C7 and R7 families run on the AWS Nitro System, which offloads virtualization, networking, and storage functions to dedicated hardware so that nearly all host resources are available to your code. Building on that foundation, the 7th generation raises networking and storage limits. For example, R7i instances support up to 128 EBS volume attachments, compared with 28 on R6i.

Pricing varies by region and instance size, so compare options on a per-unit basis rather than by sticker price alone. In the US East (N. Virginia) region, on-demand Linux pricing at the time of writing is approximately $0.1785 per hour for a c7i.xlarge (4 vCPUs, 8 GiB) and $0.2142 per hour for an r7g.xlarge (4 vCPUs, 32 GiB). For workloads that need ultra-fast temporary storage, the C7gd and R7gd variants add local NVMe SSDs with up to 45% better real-time storage performance than comparable Graviton2-based instances. These “d” variants are particularly effective for workloads requiring high-speed scratch storage for data processing or temporary files.
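Because the two instances quoted above have very different amounts of memory, hourly prices alone can mislead. The short sketch below normalizes each price to cost per vCPU-hour and per GiB-hour using the figures in the text. The 730-hour month is a common approximation, and the prices and specs are a snapshot you should re-check against current AWS pricing.

```python
# Snapshot of the on-demand Linux prices quoted above (US East, N. Virginia),
# with AWS's published vCPU/memory specs. Re-check before relying on them.
INSTANCES = {
    "c7i.xlarge": {"usd_per_hour": 0.1785, "vcpus": 4, "memory_gib": 8},
    "r7g.xlarge": {"usd_per_hour": 0.2142, "vcpus": 4, "memory_gib": 32},
}

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def unit_costs(spec: dict) -> dict:
    """Normalize an hourly price to per-vCPU, per-GiB, and monthly figures."""
    price = spec["usd_per_hour"]
    return {
        "per_vcpu_hour": price / spec["vcpus"],
        "per_gib_hour": price / spec["memory_gib"],
        "per_month": price * HOURS_PER_MONTH,
    }


if __name__ == "__main__":
    for name, spec in INSTANCES.items():
        c = unit_costs(spec)
        print(f"{name}: ${c['per_vcpu_hour']:.4f}/vCPU-h, "
              f"${c['per_gib_hour']:.4f}/GiB-h, ${c['per_month']:.2f}/month")
```

With these inputs, the r7g.xlarge costs about 20% more per vCPU-hour but about 70% less per GiB-hour, which is why memory-bound workloads usually belong on R7 despite its higher hourly price. Note that this pair also differs in architecture (Intel vs. Graviton), so a like-for-like comparison would hold the processor constant.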

Why manual rightsizing is a losing battle

Identifying the perfect instance type is only half the challenge; the real difficulty lies in continuous EC2 performance tuning as traffic fluctuates. Manual rightsizing often fails because engineering teams tend to:

- Maintain 30–50% “safety buffers” to avoid on-call alerts, resulting in massive wasted spend.

- Lack the long-term data needed for accurate selection; a reliable recommendation typically requires at least 2–4 weeks of P99 utilization data.

- Commit an EC2 Instance Savings Plan or Standard Reserved Instances to the wrong instance family, locking in architectural inefficiencies for up to three years.
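The P99-based sizing described in the list above can be sketched in a few lines of Python. This is an illustrative example, not Hykell's method: it assumes five-minute CPU utilization samples over two weeks, a hypothetical list of size options, and a 20% headroom default, and it uses the nearest-rank definition of the 99th percentile.

```python
import math

# Hypothetical vCPU counts for the sizes of one instance family, smallest first.
SIZE_VCPUS = [2, 4, 8, 16, 32, 48, 64]


def p99(samples: list[float]) -> float:
    """Nearest-rank 99th percentile of utilization samples (in percent)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.99 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def rightsize_vcpus(current_vcpus: int, cpu_samples: list[float],
                    headroom: float = 0.2):
    """Smallest size whose vCPUs cover P99 demand plus a headroom fraction."""
    needed = current_vcpus * p99(cpu_samples) / 100.0 * (1 + headroom)
    for vcpus in SIZE_VCPUS:
        if vcpus >= needed:
            return vcpus
    return None  # demand exceeds the largest size listed


if __name__ == "__main__":
    # Two weeks of 5-minute samples (14 * 24 * 12 = 4,032) for a 16-vCPU
    # instance: mostly 20% busy, with 32 brief spikes to 60%.
    samples = [20.0] * 4000 + [60.0] * 32
    print(p99(samples))                  # 20.0
    print(rightsize_vcpus(16, samples))  # 4
    # Sizing to the peak with a 50% "safety buffer" keeps all 16 vCPUs.
    print(rightsize_vcpus(16, [max(samples)], headroom=0.5))  # 16
```

The brief spikes fall outside the top 1% of samples, so P99 sizing lands on 4 vCPUs while peak-plus-buffer sizing keeps 16. Memory would be handled the same way, though EC2 does not report memory utilization to Amazon CloudWatch unless the CloudWatch agent is installed.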

Without automated oversight, these inefficiencies compound, leading to an AWS bill that grows much faster than the actual application demand.

Optimize your EC2 selection with Hykell

Choosing between C7 and R7 does not have to be a manual research project for your engineering team. Hykell provides automated cloud cost optimization that identifies the exact instance family and size your workload requires. By continuously analyzing P99 CPU and memory utilization, our platform can transition your workloads to the most cost-efficient AWS Graviton instances or rightsize your existing x86 fleet on autopilot.

Our AI-driven rate optimization then layers on the best mix of Savings Plans and Reserved Instances to maximize your savings. We help mid-to-large businesses reduce AWS compute spend by up to 40% without compromising performance. Hykell operates on a transparent model where we only take a slice of the actual savings we generate for you.

See how much you can save on AWS today with a free cost audit.