Are you tired of Amazon EKS bills scaling faster than your user traffic? Migrating Kubernetes workloads to AWS Graviton can cut compute costs by up to 40% while boosting performance, provided you know how to navigate the shift from x86 to ARM architectures.

The architectural advantage: ARM vs. x86 in EKS

The fundamental difference between Graviton and traditional x86 instances, such as those based on Intel or AMD processors, lies in how vCPUs map to hardware. On Graviton, each vCPU is a full physical core, whereas most x86 instance types use simultaneous multithreading (Hyper-Threading on Intel) to expose two vCPUs per physical core. Because no two vCPUs compete for the same core's execution resources, Graviton nodes tend to deliver more consistent tail latency and higher throughput for multi-threaded applications running on your EKS cluster.

Beyond raw speed, Graviton3-based instances use up to 60% less energy than comparable x86 instances for the same performance. That efficiency is reflected in lower hourly rates, typically 18–20% below comparable Intel Xeon instances. Combined with the per-core performance advantage, many organizations find that a workload requiring 10 x86 instances needs only 6–8 Graviton nodes to maintain the same performance. Graviton3 also delivers up to 25% better compute performance and 50% more memory bandwidth than the previous generation (Graviton2), which makes it well suited to in-memory databases and memory-intensive microservices.

Navigating the migration to mixed-architecture clusters

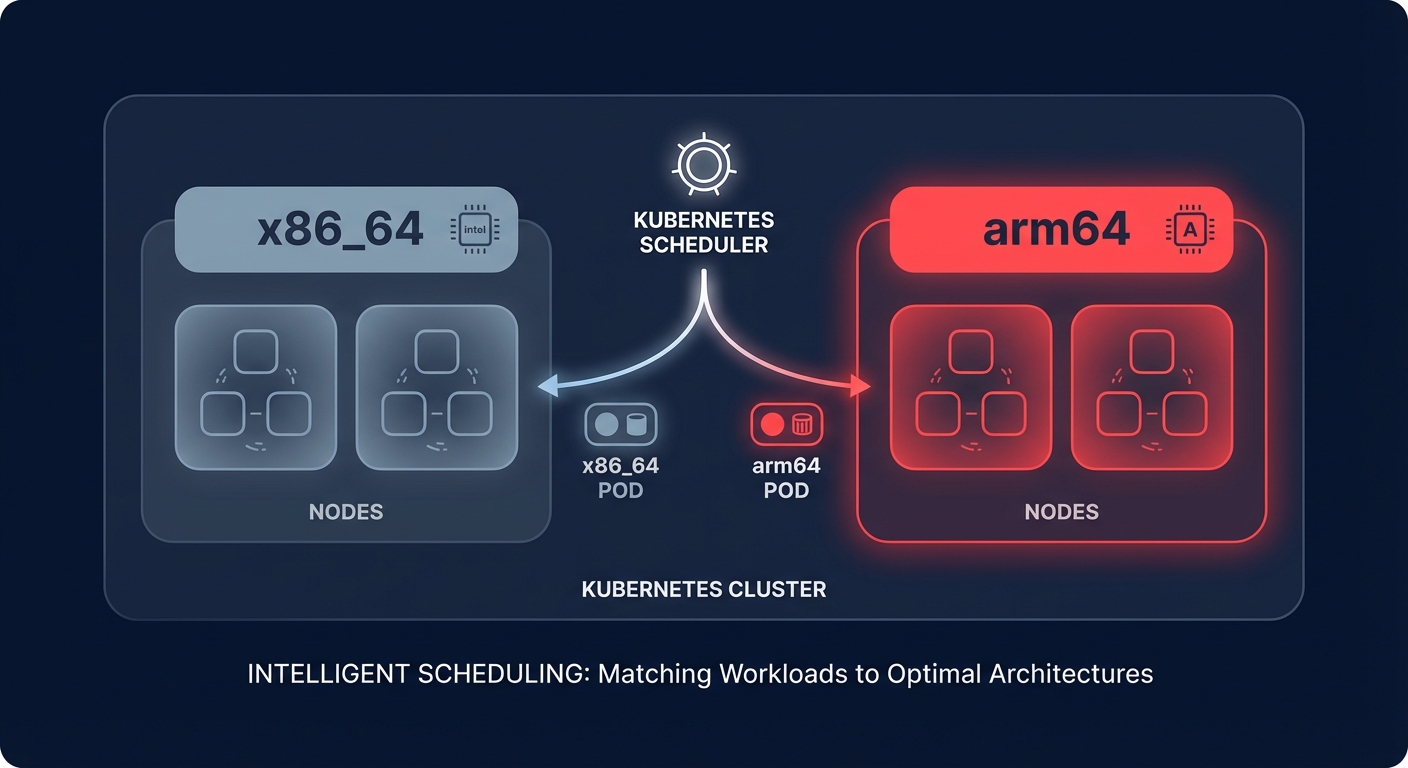

Migrating to Graviton does not require an all-or-nothing approach. Amazon EKS lets you run mixed-architecture clusters in which ARM64 and x86_64 nodes coexist in the same data plane. This setup suits phased rollouts and legacy components that lack ARM builds, so you can start capturing Graviton savings without re-architecting your entire stack from day one.
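As a sketch of what a mixed-architecture data plane can look like, the following eksctl `ClusterConfig` defines one x86 and one Graviton managed node group in the same cluster. eksctl is only one way to create these node groups (the console, Terraform, or Karpenter also work), and the cluster name, region, instance sizes, labels, and node counts are hypothetical placeholders.

```yaml
# cluster.yaml: hypothetical mixed-architecture EKS cluster definition for eksctl.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: mixed-arch-cluster   # hypothetical cluster name
  region: us-east-1          # hypothetical region

managedNodeGroups:
  # x86_64 node group for workloads that are not yet ARM-ready.
  - name: ng-amd64
    instanceType: m6i.large
    minSize: 1
    maxSize: 4
    desiredCapacity: 2
    labels:
      workload-tier: legacy  # hypothetical custom label
  # Graviton node group; eksctl picks an arm64 EKS-optimized AMI for Graviton instance types.
  - name: ng-arm64
    instanceType: m7g.large
    minSize: 1
    maxSize: 4
    desiredCapacity: 2
```

Running `eksctl create cluster -f cluster.yaml` would create both groups. The kubelet labels every node with `kubernetes.io/arch` (`amd64` or `arm64`), which is the label that architecture-aware scheduling rules match on.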

To start, make sure your CI/CD pipeline produces multi-architecture container images. With tools like Docker Buildx or Amazon CodeCatalyst, you can push a single image tag to Amazon ECR that points to a manifest list (image index) referencing both the arm64 and amd64 variants. When a pod is scheduled, the node's container runtime resolves that manifest list and pulls the variant that matches the node's architecture. Hykell’s migration acceleration often identifies ARM-ready workloads automatically, allowing your team to focus on deployment rather than manual compatibility audits.
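One possible way to produce such an image in CI is sketched below as an AWS CodeBuild `buildspec.yml`. CodeBuild is used here only for illustration; the same `docker buildx` commands should work in CodeCatalyst or any other runner. The sketch assumes a CodeBuild project with privileged mode enabled, a build image that ships Docker with the Buildx plugin, and an IAM role allowed to push to ECR. The registry URI and repository name are hypothetical.

```yaml
# buildspec.yml: hypothetical multi-architecture build that pushes one tag to ECR.
version: 0.2

env:
  variables:
    ECR_REGISTRY: "123456789012.dkr.ecr.us-east-1.amazonaws.com"  # hypothetical registry
    IMAGE_REPO: "my-service"                                       # hypothetical repository

phases:
  pre_build:
    commands:
      # Authenticate Docker against the ECR registry (AWS_REGION is set by CodeBuild).
      - aws ecr get-login-password --region "$AWS_REGION" | docker login --username AWS --password-stdin "$ECR_REGISTRY"
      # Register QEMU emulators so an x86 build host can also build arm64 images.
      - docker run --privileged --rm tonistiigi/binfmt --install arm64
      # Create and select a Buildx builder that supports multi-platform builds.
      - docker buildx create --use
  build:
    commands:
      # Build both variants and push them under one tag as a manifest list.
      - docker buildx build --platform linux/amd64,linux/arm64 -t "$ECR_REGISTRY/$IMAGE_REPO:$CODEBUILD_RESOLVED_SOURCE_VERSION" --push .
```

After a build, `docker buildx imagetools inspect` on the pushed tag should list both a `linux/amd64` and a `linux/arm64` entry. Emulated arm64 builds can be slow for compiled languages; building each variant on native hosts and merging the manifests is a common alternative.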

Advanced scheduling and autoscaling strategies

In a mixed-architecture environment, scheduling becomes more complex. You must ensure that pods whose images are built only for ARM never land on x86 nodes (and vice versa); otherwise the container fails at startup with an `exec format error` and the pod ends up in `CrashLoopBackOff`. You can enforce this with node affinity or a node selector that explicitly targets the `arm64` value of the `kubernetes.io/arch` node label.

```yaml
# Pod spec fragment: schedule this pod only on arm64 (Graviton) nodes.
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/arch
              operator: In
              values:
                - arm64
```
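When a hard architecture constraint is all you need, a `nodeSelector` is a shorter alternative to the affinity rule. The sketch below places it in a complete Deployment so the field's location is clear; the name `my-service`, the replica count, and the image URI are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-service   # hypothetical workload name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-service
  template:
    metadata:
      labels:
        app: my-service
    spec:
      # Hard constraint: only nodes labeled kubernetes.io/arch=arm64 are eligible.
      nodeSelector:
        kubernetes.io/arch: arm64
      containers:
        - name: my-service
          # Hypothetical ECR image; it must include an arm64 variant.
          image: 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-service:latest
```

Node affinity remains the better choice when you need soft preferences (`preferredDuringSchedulingIgnoredDuringExecution`) or several alternative terms; `nodeSelector` only expresses exact label matches.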

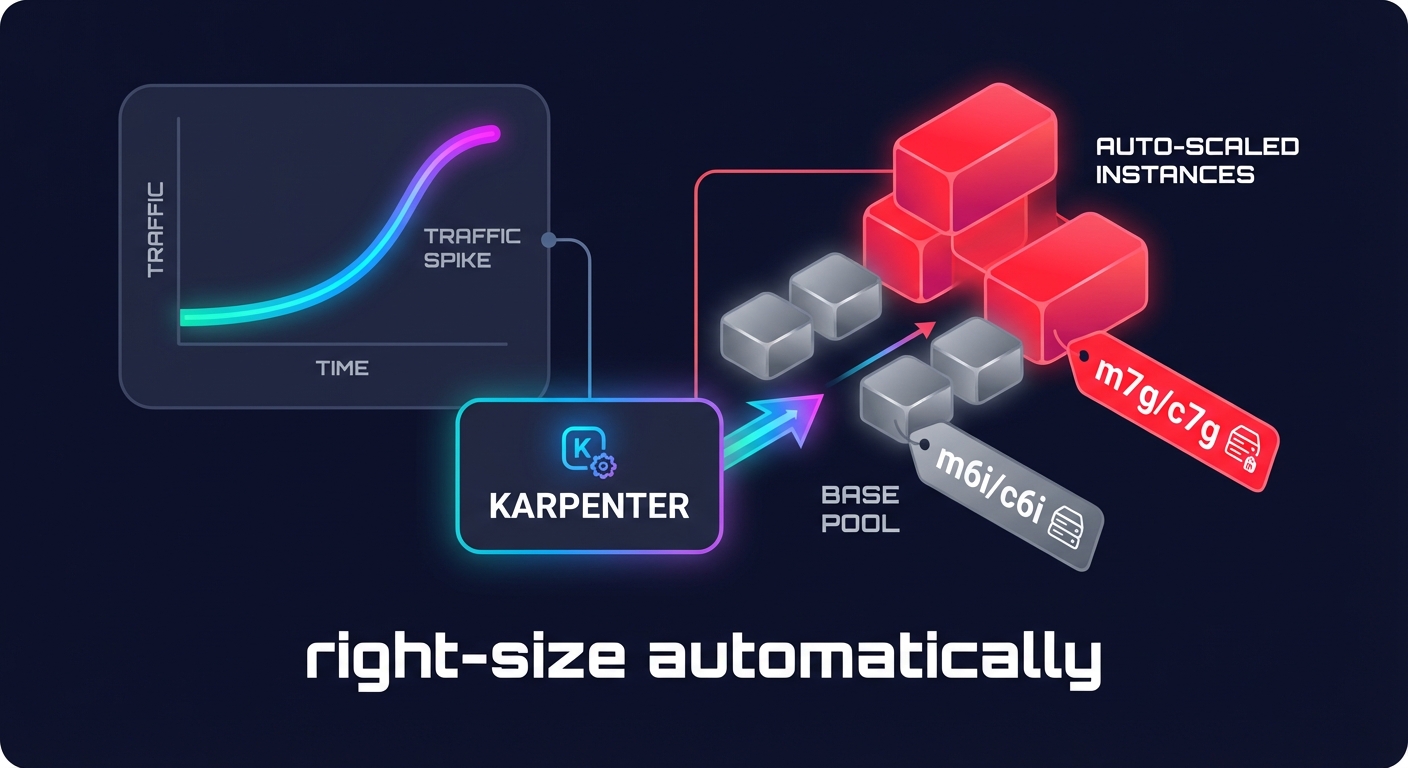

For teams optimizing at scale, Karpenter is often a better fit than the standard Cluster Autoscaler. Karpenter provisions instances based on the requirements of pending pods and can choose the most cost-effective type that satisfies them, mixing Graviton families like m7g or c7g with x86 families like m6i or c6i to meet demand. This helps ensure you are not paying for Intel instances when cheaper Graviton capacity would serve the same pods. And because Karpenter launches nodes directly through EC2 rather than resizing predefined Auto Scaling groups, it can bring up capacity for pending pods quickly, reducing scaling lag.
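A minimal sketch of a Karpenter NodePool that allows both the Graviton and x86 families mentioned above, assuming Karpenter v1 (the `karpenter.sh/v1` API) and an existing `EC2NodeClass` named `default` whose AMI selection covers both arm64 and amd64. The NodePool name, capacity type, and CPU limit are hypothetical choices.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: mixed-arch   # hypothetical NodePool name
spec:
  template:
    spec:
      requirements:
        # Allow both architectures so either can satisfy pending pods.
        - key: kubernetes.io/arch
          operator: In
          values: ["arm64", "amd64"]
        # Limit candidates to the families discussed above (Graviton: m7g, c7g; x86: m6i, c6i).
        - key: karpenter.k8s.aws/instance-family
          operator: In
          values: ["m7g", "c7g", "m6i", "c6i"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
      # Assumes an EC2NodeClass named "default" already exists.
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default
  # Hypothetical cap on the total vCPUs this NodePool may provision.
  limits:
    cpu: 1000
```

Pods that use multi-architecture images and carry no `kubernetes.io/arch` constraint can run on either architecture, which leaves Karpenter free to pick the cheaper Graviton option. Pods pinned to `arm64` restrict it to the Graviton families in the list.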

Benchmarking for performance and cost

You should never assume that Graviton will be faster for every microservice. Java, Python, Go, and Node.js applications typically run well on ARM and see meaningful price-performance gains, but some single-threaded legacy applications, or those relying on x86-specific instruction sets such as AVX-512, may perform better on x86. Effective benchmarking means running the same workload side by side on both architectures in a staging environment and comparing throughput, latency, and cost.

Monitoring metrics such as request latency, CPU utilization per request, and memory bandwidth is essential for a successful transition. If a microservice turns out to be 20% slower on ARM, the extra nodes it needs can cancel out the roughly 20% lower hourly rate, making it a poor candidate for migration. Hykell’s observability tools track these anomalies in real time, so your optimization efforts improve your bottom line rather than just shifting costs between resource categories.
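The break-even in that example follows from a simple ratio. Write p for the hourly price of one node and T for the throughput one node sustains (both symbols are introduced here for illustration), and read "20% slower" as 20% less throughput per node, which is an interpretation assumed for this sketch. The cost of serving a fixed load on ARM relative to x86 is then:

```latex
\frac{C_{\text{arm}}}{C_{\text{x86}}}
  = \frac{p_{\text{arm}}}{p_{\text{x86}}} \cdot \frac{T_{\text{x86}}}{T_{\text{arm}}}
  = 0.8 \cdot \frac{1}{0.8}
  = 1
```

With p_arm = 0.8 × p_x86 and T_arm = 0.8 × T_x86, the ARM fleet needs 1 / 0.8 = 1.25 times as many nodes, so it costs exactly the same as the x86 fleet. Only a ratio below 1 means the migration saves money, which is why per-service benchmarks matter.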

Putting EKS optimization on autopilot

Managing the nuances of instance types, commitment strategies, and Kubernetes scaling is a constant engineering effort. Many teams leave 20–40% of potential savings on the table because they cannot manually adjust to every shift in traffic or AWS pricing update. Automation has become the common way to maintain a high performance-to-cost ratio without exhausting internal DevOps resources.

Hykell solves this by automating AWS rate optimization and infrastructure tuning. We analyze your EKS usage patterns in real time, identify the best candidates for Graviton migration, and automatically adjust your commitment portfolio, including Savings Plans and Reserved Instances, to cover both architectures (Compute Savings Plans, for example, apply across instance families, so a single commitment can cover both Graviton and x86 usage). This ensures you get the highest possible Effective Savings Rate (ESR) without the risk of long-term vendor lock-in or paying for unused capacity.

By combining the superior price-performance of Graviton with automated cloud cost optimization from Hykell, you can achieve deep savings while your engineers focus on building features rather than managing infrastructure. If you are ready to see how much you could save on your EKS clusters, get a free cost assessment today.