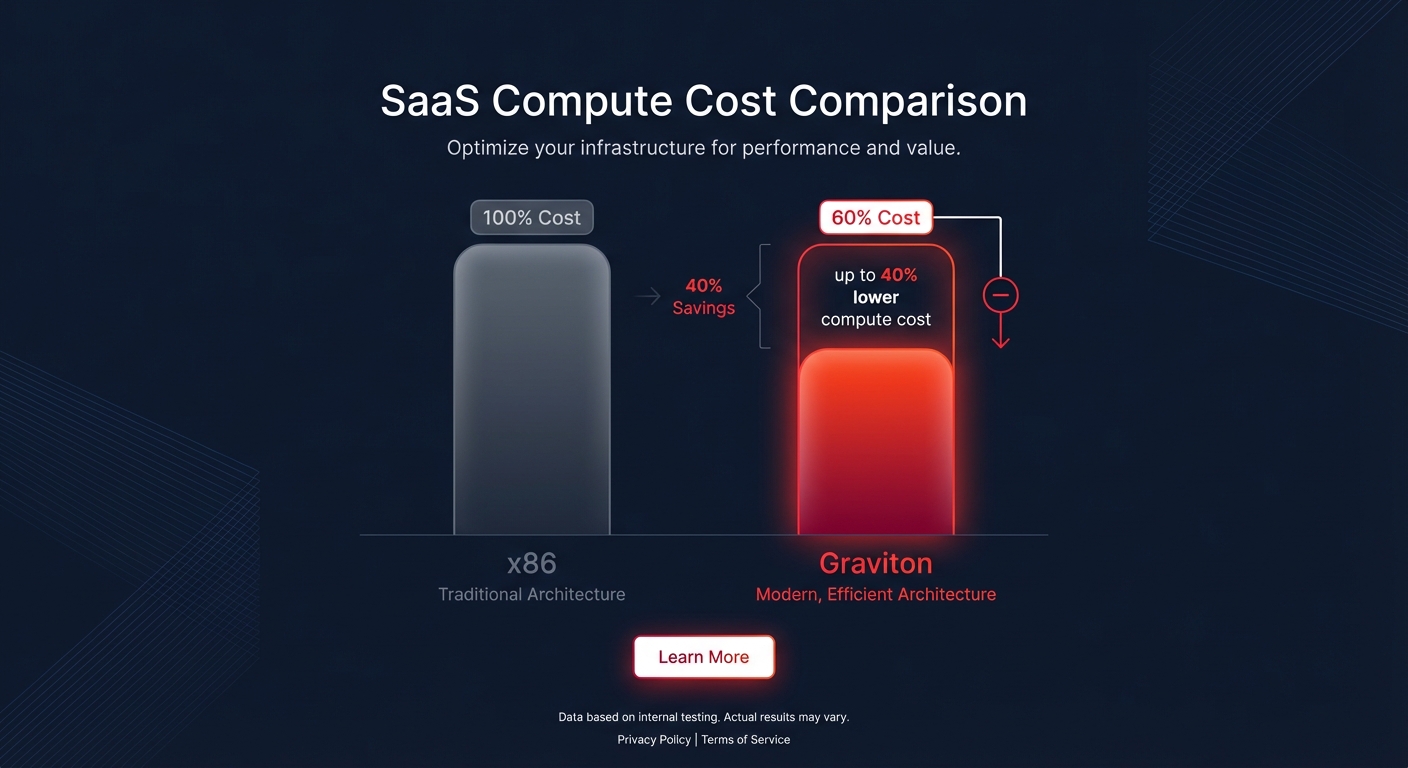

Are you still paying the “x86 tax” on your AWS bill? While Intel and AMD have dominated the data center for decades, AWS Graviton processors now deliver up to 40% better price-performance for modern, cloud-native workloads.

The architecture gap: ARM vs. x86

The performance difference between the architectures becomes clear when you compare ARM and x86 pricing models and hardware designs. Traditional x86 instances typically rely on hyperthreading (simultaneous multithreading) to present two virtual cores (vCPUs) for every physical core. In contrast, Graviton maps each vCPU to its own physical core. This 1:1 mapping removes contention between sibling threads that would otherwise share one core's execution resources, resulting in more predictable throughput for your multi-threaded applications.
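To make the vCPU mapping concrete, here is a minimal Python sketch of the arithmetic. The function name `physical_cores` and the 16-vCPU example size are illustrative assumptions, not figures from AWS.

```python
def physical_cores(vcpus: int, threads_per_core: int) -> int:
    """Return the number of physical cores behind a given vCPU count."""
    if threads_per_core < 1 or vcpus < 1 or vcpus % threads_per_core != 0:
        raise ValueError("vcpus must be a positive multiple of threads_per_core")
    return vcpus // threads_per_core


# Assumed example: a 16-vCPU instance on each architecture.
x86_cores = physical_cores(16, threads_per_core=2)       # hyperthreaded x86
graviton_cores = physical_cores(16, threads_per_core=1)  # Graviton, 1:1 mapping
print(x86_cores, graviton_cores)  # prints: 8 16
```

Under these assumptions, the same vCPU count is backed by twice as many physical cores on Graviton, which is why vCPU-for-vCPU comparisons between the two architectures are not like-for-like.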

Because AWS custom-builds these processors on the ARM64 architecture, they are more energy efficient, using up to 60% less energy than comparable x86 instances for the same performance. This efficiency contributes to lower instance prices. In practice, a cost comparison between Graviton and Intel instances often reveals that you can replace ten x86-based instances with just six to eight Graviton instances without any loss in performance, immediately reducing your total infrastructure footprint.
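The fleet-consolidation claim can be turned into a quick calculation. The sketch below assumes, purely for illustration, that an x86 instance and a Graviton instance cost the same per hour (a placeholder price of 1.0), so the saving comes only from running fewer instances. Real prices differ by family, size, and region.

```python
def fleet_saving(x86_count: int, graviton_count: int,
                 x86_hourly: float, graviton_hourly: float) -> float:
    """Return the fractional cost reduction from replacing an x86 fleet."""
    x86_cost = x86_count * x86_hourly
    graviton_cost = graviton_count * graviton_hourly
    return 1 - graviton_cost / x86_cost


# Hypothetical prices: both instance types at 1.0 per hour.
for graviton_count in (8, 6):
    saving = fleet_saving(10, graviton_count, 1.0, 1.0)
    print(f"10 x86 -> {graviton_count} Graviton: {saving:.0%} lower fleet cost")
```

If the Graviton instance is also cheaper per hour, pass its actual price as `graviton_hourly` and the saving grows accordingly.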

Performance and cost trade-offs

When evaluating the switch, you should consider the generational gains available within the Graviton family. Graviton3 delivers up to 25% better compute performance than its predecessor, Graviton2. If you are upgrading from aging x86 C5 instances to Graviton3-based C7g instances, you can achieve a 25% performance boost while simultaneously realizing a 30% reduction in compute costs.
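The two figures for the C5 to C7g move compound when expressed as performance per dollar. The sketch below works through that arithmetic; the function name is an illustrative assumption, and the inputs are simply the 25% and 30% quoted above.

```python
def perf_per_dollar_gain(perf_gain: float, cost_reduction: float) -> float:
    """Return the relative improvement in performance per dollar.

    perf_gain:      fractional performance increase (0.25 for 25%)
    cost_reduction: fractional cost decrease (0.30 for 30%)
    """
    if not 0 <= cost_reduction < 1:
        raise ValueError("cost_reduction must be in [0, 1)")
    return (1 + perf_gain) / (1 - cost_reduction) - 1


gain = perf_per_dollar_gain(perf_gain=0.25, cost_reduction=0.30)
print(f"Performance per dollar improves by about {gain:.0%}")
```

With these inputs the ratio is 1.25 / 0.70, or roughly 1.79 times the work per dollar spent, assuming both headline numbers hold for your workload.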

The technical benefits extend to memory-intensive workloads as well. Graviton3 provides a memory bandwidth of 115-120 GB/s, compared with the 60-70 GB/s offered by Intel Xeon and the 80-90 GB/s of AMD EPYC. These specifications make ARM-based instances well suited to in-memory databases and heavy data processing. Furthermore, for organizations running large Java applications, Graviton4 is up to 45% faster than Graviton3. When you pair this hardware with high-performance runtimes like Azul Platform Prime, performance can jump another 30% over standard OpenJDK.

Despite these gains, x86 remains relevant for specific use cases where its higher clock speeds provide an advantage. Single-threaded workloads sometimes perform 6-14% better on Intel or AMD instances. Additionally, if your environment depends on Windows Server or on x86-specific instruction set extensions, migrating may not be feasible. However, for the majority of Linux-based, cloud-native applications, the range of AWS Graviton instance types offers a significant economic and performance advantage.

Evaluating software compatibility and migration paths

Before you start migrating applications to Graviton instances, you must audit your stack for ARM64 compatibility. Code written in interpreted languages like Python, Node.js, and Ruby generally works without modification, provided that any native extensions it depends on are available as ARM64 builds. Compiled languages such as Go, Rust, or C++ require recompilation for the ARM architecture to run natively.
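A first step in such an audit is knowing which architecture a given host or build agent actually runs on. The following minimal Python sketch uses the standard library's `platform.machine()`; the helper names and the alias table are illustrative assumptions and cover only the common 64-bit names.

```python
import platform

# Common machine strings reported by different operating systems.
_ARCH_ALIASES = {
    "aarch64": "arm64",   # Linux on ARM64 (including Graviton)
    "arm64": "arm64",     # macOS on Apple silicon
    "x86_64": "x86_64",   # Linux and macOS on Intel/AMD
    "amd64": "x86_64",    # Windows on Intel/AMD
}


def normalize_arch(machine: str) -> str:
    """Map a raw machine string to 'arm64', 'x86_64', or 'unknown'."""
    return _ARCH_ALIASES.get(machine.lower(), "unknown")


def is_arm64_host() -> bool:
    """Return True when the current interpreter runs on an ARM64 machine."""
    return normalize_arch(platform.machine()) == "arm64"


print(f"Host architecture: {normalize_arch(platform.machine())}")
```

A check like this can gate architecture-specific steps in a deployment script, such as choosing which binary or package build to install.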

Most major Linux distributions, including Amazon Linux 2, Ubuntu, and RHEL, provide full ARM64 support. In a containerized ecosystem, you can often simplify the transition by updating your CI/CD pipelines to build multi-architecture Docker images using tools like `docker buildx`. You should also watch for gaps in multi-architecture support, particularly in third-party monitoring agents, security scanners, or legacy drivers that may not yet have ARM versions available.
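As a rough illustration of the multi-architecture build step, the Python sketch below assembles a `docker buildx build` command that targets both `linux/amd64` and `linux/arm64`. The helper name `buildx_command` and the image tag `registry.example.com/my-app:latest` are hypothetical; the `--platform`, `--tag`, and `--push` flags are standard `docker buildx build` options.

```python
def buildx_command(image_tag: str,
                   platforms: tuple = ("linux/amd64", "linux/arm64"),
                   context: str = ".",
                   push: bool = True) -> list:
    """Build the argument list for a multi-architecture image build."""
    if not platforms:
        raise ValueError("at least one target platform is required")
    cmd = ["docker", "buildx", "build",
           "--platform", ",".join(platforms),
           "--tag", image_tag]
    if push:
        # Multi-platform results are usually pushed straight to a registry.
        cmd.append("--push")
    cmd.append(context)
    return cmd


# Hypothetical image name, for illustration only.
cmd = buildx_command("registry.example.com/my-app:latest")
print(" ".join(cmd))
```

In a pipeline, the resulting list could be passed to `subprocess.run(cmd, check=True)` on a runner that has Docker with buildx configured.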

Selecting the right Graviton instance family

Choosing the correct family is essential for effective AWS EC2 cost optimization. For general-purpose workloads like web servers and microservices, the M7g family provides a balanced mix of compute and memory resources. If your applications are CPU-bound – such as high-performance computing (HPC), machine learning inference, or video encoding – the C7g family is the better choice.

For memory-optimized needs, the R7g family excels at running open-source databases like PostgreSQL or caches like Redis. If you are managing development environments or low-traffic applications, the burstable T4g family remains the most budget-friendly option. Consulting an EC2 instance type selection guide can help you identify which of your current x86 instances are over-provisioned and ready for a more efficient Graviton swap.
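The family guidance above can be condensed into a small lookup. This Python sketch only encodes the pairings named in this section; the workload labels and the function name `suggest_family` are illustrative assumptions, and a real selection should also weigh measured CPU and memory utilization.

```python
# Workload profile -> Graviton instance family, as described in this section.
WORKLOAD_TO_FAMILY = {
    "web-server": "m7g",       # general purpose
    "microservice": "m7g",
    "hpc": "c7g",              # CPU-bound
    "ml-inference": "c7g",
    "video-encoding": "c7g",
    "database": "r7g",         # memory optimized
    "cache": "r7g",
    "dev-environment": "t4g",  # burstable
    "low-traffic": "t4g",
}


def suggest_family(workload: str) -> str:
    """Return the suggested Graviton family for a known workload label."""
    key = workload.strip().lower()
    if key not in WORKLOAD_TO_FAMILY:
        raise ValueError(f"no suggestion for workload {workload!r}")
    return WORKLOAD_TO_FAMILY[key]


for label in ("web-server", "video-encoding", "cache", "dev-environment"):
    print(f"{label}: {suggest_family(label)}")
```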

Accelerating your transition with Hykell

A typical manual migration can take between 9 and 12 weeks of engineering time, a cost that often eats into your projected savings. Hykell helps you accelerate your Graviton gains by using an automated platform to identify the best migration candidates based on your real-world usage patterns. This data-driven approach removes the guesswork, helping you prioritize the workloads that offer the highest return on investment.

Our cloud observability tools provide you with real-time visibility into your Effective Savings Rate (ESR), allowing you to validate performance improvements and cost reductions the moment you make the switch. Furthermore, Hykell integrates these changes with automated AWS rate optimization, ensuring your Savings Plans and Reserved Instances automatically cover your new ARM-based fleet without requiring any manual management from your DevOps team.

By combining right-sizing with generational upgrades and continuous monitoring, you can finally capture that elusive 40% reduction in cloud spend. The best part is that the Hykell pricing model is entirely success-based – we only take a slice of what we save you. If we do not reduce your bill, you do not pay. Start your automated cost audit today and stop overpaying for compute.