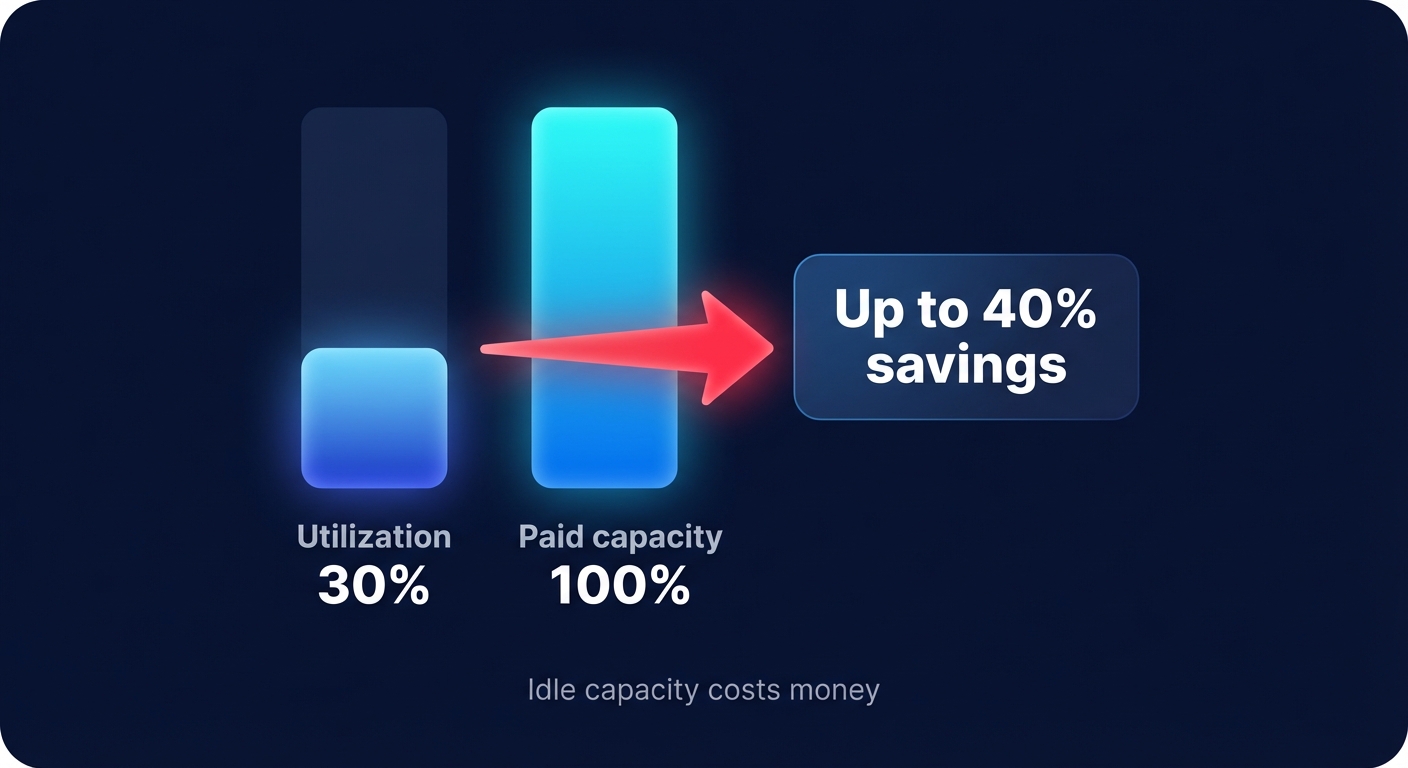

Did you know the average AWS environment runs at just 30% utilization while you pay full price for idle capacity? Most engineering leaders leave up to 40% of their cloud budget on the table because manual discount management is too complex to maintain at scale.

The difference between rate and workload optimization

To master cloud efficiency, you must pull two distinct levers: workload optimization and rate optimization. Workload optimization focuses on automated AWS rightsizing, which is the process of matching your instance types to actual demand and deleting “zombie” resources like unattached EBS volumes. While critical for operational health, rightsizing alone often leaves significant money on the table because it does not address the underlying unit price of the infrastructure.

AWS rate optimization addresses the price you pay for the resources you have already decided to run. By strategically layering Reserved Instances (RIs), Savings Plans, and Spot Instances, you can lower your unit costs by up to 72% with commitment-based compute discounts and by up to 90% with Spot for fault-tolerant workloads. The most efficient organizations integrate rate and workload optimization into a single strategy, achieving double-digit savings without ever touching their application code or disrupting engineering workflows.

Maximizing compute discounts through RIs and Savings Plans

The backbone of rate optimization is moving away from the “on-demand” pricing trap. AWS provides several commitment-based models, but choosing between them requires a careful balance of flexibility and discount depth. Understanding the differences between Savings Plans and Reserved Instances is essential for any FinOps strategy.

Savings Plans are the modern standard for most teams, offering up to 72% savings in exchange for a dollar-per-hour spend commitment over a one- or three-year term. Compute Savings Plans provide the most flexibility, applying automatically across EC2, Fargate, and Lambda regardless of region or instance family, with discounts of up to 66%. If your architecture is stable and you are certain of your instance family, EC2 Instance Savings Plans offer the deepest discounts, up to 72%, for that specific family within a region.
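To make the dollar-per-hour mechanics concrete, here is a minimal sketch of how a single hour might be billed under a Savings Plan. It is a simplified model rather than AWS's actual billing logic: the function name, the single uniform discount, and the sample figures are all assumptions chosen for illustration.

```python
def hourly_cost(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Approximate bill for one hour under a Savings Plan (simplified model).

    on_demand_usage: what the hour's usage would cost at on-demand rates ($)
    commitment:      hourly Savings Plan commitment ($), owed whether used or not
    discount:        plan discount versus on-demand, as a fraction (assumed uniform)
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    # Each committed dollar buys 1 / (1 - discount) dollars of on-demand usage.
    covered = commitment / (1.0 - discount)
    # Usage beyond what the commitment covers is billed at on-demand rates.
    overflow = max(0.0, on_demand_usage - covered)
    return commitment + overflow


# Hypothetical figures: a $3/hour commitment at a 50% discount covers $6 of usage.
busy_hour = hourly_cost(on_demand_usage=10.0, commitment=3.0, discount=0.5)
quiet_hour = hourly_cost(on_demand_usage=2.0, commitment=3.0, discount=0.5)
```

In the busy hour the bill is $7 instead of $10. In the quiet hour the commitment still costs $3 even though on-demand would have cost $2, which is the overcommitment risk that makes sizing the commitment the hard part.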

Reserved Instances remain relevant for specific use cases, particularly when you need zonal capacity reservations to guarantee availability during peak events. Standard RIs can deliver up to 72% savings but lock you into fixed instance attributes such as instance type and region. If your architecture is still evolving, Convertible RIs allow you to change instance families as your needs shift, though they typically offer a lower discount rate than Standard RIs.

Leveraging Spot Instances for fault-tolerant workloads

For non-production environments, batch processing, or CI/CD pipelines, Spot Instances are the ultimate rate optimization tool. By utilizing spare AWS capacity, you can access discounts of up to 90% off on-demand prices. This model is ideal for stateless or interruptible tasks that can handle the two-minute termination notice AWS provides when it needs the capacity back.
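One way a workload can react to the two-minute notice is to poll the EC2 instance metadata service for a Spot `instance-action` document. The sketch below assumes it runs on a Spot Instance with IMDSv2 enabled; the helper names are hypothetical, and what you do with the remaining time (draining, checkpointing) is left to the application.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

IMDS = "http://169.254.169.254/latest"


def fetch_interruption_notice(timeout: float = 2.0) -> Optional[str]:
    """Return the raw instance-action document, or None if no interruption is scheduled."""
    # IMDSv2: obtain a short-lived session token first.
    token_req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    token = urllib.request.urlopen(token_req, timeout=timeout).read().decode()
    notice_req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        return urllib.request.urlopen(notice_req, timeout=timeout).read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no notice has been issued yet
            return None
        raise


def seconds_remaining(notice_body: str, now: datetime) -> float:
    """Seconds between `now` (timezone-aware) and the interruption time in the notice."""
    notice = json.loads(notice_body)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    return (deadline.replace(tzinfo=timezone.utc) - now).total_seconds()
```

A worker loop could call `fetch_interruption_notice()` every few seconds and, once it returns a document, use `seconds_remaining(body, datetime.now(timezone.utc))` to decide how much work it can still checkpoint.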

To maintain performance while using Spot, you should architect for fault tolerance using fleets that span multiple instance types and Availability Zones. When you combine a baseline of Savings Plans for steady-state traffic with a hybrid Spot strategy for background tasks, you can drive your EC2 cost-to-performance ratio to its absolute minimum without sacrificing the reliability of your core services.

Storage and architectural rate levers

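Rate optimization extends beyond virtual machines into your data and processor layers. Storage costs often account for 25–30% of a total cloud bill, yet they are frequently overlooked because they seem fixed. Migrating from gp2 to gp3 EBS volumes is one of the fastest ways to lower your monthly spend: gp3 costs up to 20% less per GB and includes a baseline of 3,000 IOPS and 125 MiB/s at any volume size, which covers many workloads. Volumes that depend on higher IOPS or throughput under gp2 may need extra provisioned performance on gp3, which narrows the saving.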
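As a rough check before migrating, you can estimate the monthly difference per volume. The sketch below is illustrative only: the prices are assumed us-east-1 list prices and should be replaced with current rates for your region, the function names are hypothetical, and throughput charges and gp2 burst behavior are ignored for simplicity.

```python
from typing import Tuple

# Assumed list prices; verify against current AWS pricing for your region.
GP2_PER_GB = 0.10           # $/GB-month
GP3_PER_GB = 0.08           # $/GB-month
GP3_BASE_IOPS = 3000        # IOPS included with every gp3 volume
GP3_PER_EXTRA_IOPS = 0.005  # $/provisioned IOPS-month above the baseline


def gp2_baseline_iops(size_gb: int) -> int:
    """gp2 baseline scales at 3 IOPS per GB, with a floor of 100 and a cap of 16,000."""
    return min(max(3 * size_gb, 100), 16_000)


def monthly_costs(size_gb: int) -> Tuple[float, float]:
    """Return (gp2_cost, gp3_cost) for a gp3 volume provisioned to match gp2 baseline IOPS."""
    gp2 = size_gb * GP2_PER_GB
    extra_iops = max(0, gp2_baseline_iops(size_gb) - GP3_BASE_IOPS)
    gp3 = size_gb * GP3_PER_GB + extra_iops * GP3_PER_EXTRA_IOPS
    return gp2, gp3


small = monthly_costs(500)    # baseline IOPS fit inside gp3's included 3,000
large = monthly_costs(2000)   # needs 3,000 extra provisioned IOPS on gp3
```

Under these assumed prices the 500 GB volume drops from about $50 to $40 per month, while the 2,000 GB volume drops from about $200 to $175 once the extra IOPS are paid for, so the percentage saved shrinks as volumes grow.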

For object storage, implementing Amazon S3 Intelligent-Tiering can automate the movement of data to infrequent access tiers, cutting storage bills by as much as 50% for data with shifting access patterns. Beyond storage, adopting AWS Graviton processors provides an architectural advantage. These ARM-based processors offer up to 40% better price-performance than comparable x86 instances. When you stack Graviton’s lower base price with commitment-based Savings Plans, your effective savings rate compounds, creating a highly efficient infrastructure.
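The compounding effect of stacked discounts is multiplicative rather than additive. A minimal sketch, using hypothetical discount levels (a 20% lower base price and a 30% commitment discount are assumptions, not quoted AWS rates):

```python
def stacked_discount(*discounts: float) -> float:
    """Combined discount when each discount applies to the already-reduced price."""
    remaining = 1.0
    for d in discounts:
        if not 0.0 <= d <= 1.0:
            raise ValueError("each discount must be between 0 and 1")
        remaining *= 1.0 - d
    return 1.0 - remaining


# Hypothetical: 20% cheaper instance type, then a 30% Savings Plan discount on top.
combined = stacked_discount(0.20, 0.30)  # 1 - 0.8 * 0.7, roughly 0.44
```

The combined rate is about 44%, less than the 50% you would get by simply adding the two figures, because the second discount applies to an already-reduced price.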

Why manual rate optimization fails at scale

Most engineering teams struggle to maintain high discount coverage because cloud environments are too dynamic for manual oversight. A commitment that makes sense today may become an “orphaned” expense next month if developers migrate a workload to a different instance family or a serverless architecture. While manual audits using AWS Trusted Advisor or the AWS Cost Optimization Hub provide helpful snapshots, they do not solve the ongoing risk of overcommitment.

Engineers often respond to this complexity by keeping a 30–50% “safety buffer” of on-demand spend. This buffer is essentially a waste tax paid to avoid being locked into the wrong resources. This cautious approach prevents you from capturing the full value of the discounts you are eligible for, slowing down your ability to reinvest those savings into innovation.
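To judge whether a buffer is overly cautious, it helps to know how far usage would have to fall before a commitment actually loses money. The sketch below uses a simplified model with hypothetical names and figures: a commitment at discount `d` costs `1 - d` of the on-demand value it covers, so it breaks even when that covered capacity is used `1 - d` of the time.

```python
def break_even_utilization(discount: float) -> float:
    """Utilization below which a commitment costs more than staying on demand."""
    return 1.0 - discount


def net_savings(committed_on_demand_value: float, utilization: float, discount: float) -> float:
    """Savings (negative means a loss) from committing instead of paying on demand.

    committed_on_demand_value: on-demand value of the capacity the commitment covers ($)
    utilization:               fraction of that capacity actually used (0 to 1)
    discount:                  commitment discount versus on-demand (0 to 1)
    """
    on_demand_cost = committed_on_demand_value * utilization
    commitment_cost = committed_on_demand_value * (1.0 - discount)
    return on_demand_cost - commitment_cost


# Hypothetical: covering $100,000 of on-demand usage at a 30% discount.
well_used = net_savings(100_000, utilization=0.9, discount=0.3)   # about +20,000
under_used = net_savings(100_000, utilization=0.6, discount=0.3)  # about -10,000
```

In this example the commitment pays off as long as at least 70% of the covered capacity is used, which suggests a blanket 30–50% buffer can be far wider than the actual risk for stable workloads.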

Putting AWS optimization on autopilot with Hykell

Hykell removes the friction of manual management by automating the entire rate optimization lifecycle. Instead of your team spending weeks analyzing CloudWatch metrics and pricing data to forecast usage, the Hykell platform continuously monitors your environment to buy, exchange, and adjust commitments in real time. This keeps your Effective Savings Rate (ESR) optimized even as your infrastructure evolves.
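The article does not define ESR precisely. A commonly used FinOps definition, assumed here, compares what you actually paid with what the same usage would have cost at on-demand rates. The function name and figures below are illustrative and do not describe Hykell's own calculation.

```python
def effective_savings_rate(actual_spend: float, on_demand_equivalent: float) -> float:
    """ESR = 1 - actual spend / on-demand equivalent spend (assumed definition)."""
    if on_demand_equivalent <= 0:
        raise ValueError("on_demand_equivalent must be positive")
    return 1.0 - actual_spend / on_demand_equivalent


# Hypothetical month: $70,000 paid for usage worth $100,000 at on-demand rates.
esr = effective_savings_rate(actual_spend=70_000, on_demand_equivalent=100_000)
```

Here the ESR is about 30%. Because the metric includes unused commitments in actual spend, it penalizes overcommitment as well as undercoverage.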

Because Hykell operates at the billing and cost dataset level, you receive the benefits of a fully optimized portfolio with zero code changes and zero engineering effort. The platform dynamically manages the mix of Savings Plans and RIs, often doubling the savings of teams who attempt to manage their spend manually. You retain full control while Hykell’s automation works in the background to eliminate the “on-demand” premium.

With a customizable observability dashboard, you can track every dollar saved and allocate costs across business units with precision. Hykell’s performance-based pricing model ensures that our incentives are aligned with yours; we only take a slice of the actual savings realized. If we don’t save you money, you don’t pay. Stop overpaying for idle capacity and start reclaiming your budget for the projects that drive growth. Book a free AWS cost audit with Hykell today to uncover your hidden savings.